`def __init__ (self, schema=None)`

`def schema (self)`

`def setup_ex (self, init_net, finish_net)`

`def read_ex (self, local_init_net, local_finish_net)`

`def read_record_ex (self, local_init_net, local_finish_net)`

`def read (self, read_net)`

`def reset (self, net)`

`def read_record (self, read_net)`

`def execution_step (self, reader_net_name=None, external_should_stop=None)`
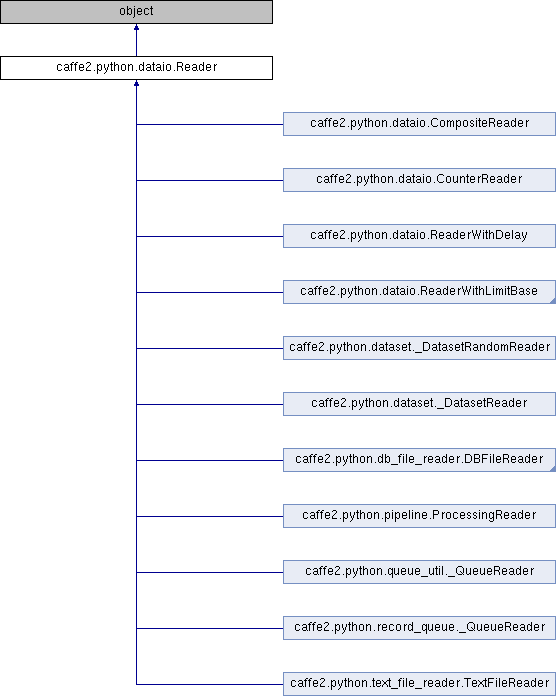

Reader is an abstract class to be implemented in order to provide operations capable of iterating through a dataset or stream of data.

A Reader must implement at least one operation, `read`, which adds operations to a net that read the next batch of data. Readers can optionally support the `reset` operation, which is useful when multiple passes over the data are required.

Definition at line 29 of file dataio.py.
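The `read`/`reset` contract described above can be illustrated without Caffe2 at all. The following is a minimal plain-Python analogy, not the Caffe2 API: `ToyNet`, `ToyListReader`, and `run_passes` are hypothetical names invented for this sketch, and a "net" is modeled as nothing more than a list of deferred callables. It only aims to show that `read` adds operations to a net rather than reading immediately, and that `reset` is what makes a second pass possible.

```python
# Plain-Python analogy of the Reader contract; none of these names are Caffe2 APIs.


class ToyNet:
    """Hypothetical stand-in for a net: an ordered list of deferred operations."""

    def __init__(self, name):
        self.name = name
        self.ops = []

    def add_op(self, fn):
        self.ops.append(fn)

    def run(self):
        for op in self.ops:
            op()


class ToyListReader:
    """Hypothetical reader over an in-memory list, one batch per net run."""

    def __init__(self, rows, batch_size):
        self._rows = list(rows)
        self._batch_size = batch_size
        self._cursor = 0

    def read(self, read_net):
        # Nothing is read here; an operation is only appended to the net.
        should_stop = {"value": False}
        fields = {"batch": []}

        def read_op():
            batch = self._rows[self._cursor:self._cursor + self._batch_size]
            self._cursor += len(batch)
            fields["batch"] = batch
            should_stop["value"] = not batch  # empty batch means end of data

        read_net.add_op(read_op)
        return should_stop, fields

    def reset(self, net):
        # Optional: append an operation that rewinds the reader for another pass.
        def reset_op():
            self._cursor = 0

        net.add_op(reset_op)


def run_passes(reader, num_passes):
    """Run the read net until end of data, then the reset net, num_passes times."""
    read_net, reset_net = ToyNet("read"), ToyNet("reset")
    should_stop, fields = reader.read(read_net)
    reader.reset(reset_net)
    passes = []
    for _ in range(num_passes):
        batches = []
        while True:
            read_net.run()
            if should_stop["value"]:
                break
            batches.append(fields["batch"])
        passes.append(batches)
        reset_net.run()
    return passes
```

Calling `run_passes(ToyListReader([1, 2, 3, 4, 5], 2), 2)` yields the same three batches in each pass; without the reset net the second pass would be empty.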

def caffe2.python.dataio.Reader.execution_step (self, reader_net_name = None, external_should_stop = None)

Create an execution step with a net containing read operators.

The execution step will contain a `stop_blob` that stops the execution loop when the end of data is reached.

E.g.:

    from caffe2.python import core, workspace

    # `reader` is an existing Reader instance.
    read_step, fields = reader.execution_step()
    consume_net = core.Net('consume')
    consume_net.Print(fields[0], [])
    p = core.Plan('reader')
    p.AddStep(read_step.AddNet(consume_net))
    workspace.RunPlan(p)

Args:

    reader_net_name: (optional) the name of the reader_net to be created. The execution step will be named accordingly.

Returns:

    A tuple (read_step, fields), with:

    read_step: A newly created execution step containing a net with read operations. The step will have `stop_blob` set, in order to stop the loop on end of data.

    fields: A tuple of BlobReference containing the latest batch of data that was read.

Definition at line 107 of file dataio.py.
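The stop-on-end-of-data loop that `execution_step` sets up can be pictured with a small plain-Python sketch. This is an analogy only: `ToyStep`, `make_read_step`, and `consume_all` are hypothetical names, nets are modeled as plain callables, and the step naming is this sketch's own convention. The sketch checks the stop flag right after each net runs, which is a simplification and not a claim about how Caffe2 schedules nets inside a step.

```python
# Plain-Python analogy of an execution step with a stop flag; not Caffe2 code.


class ToyStep:
    """Hypothetical step: runs its nets in order, looping until should_stop()."""

    def __init__(self, name, should_stop):
        self.name = name
        self.nets = []
        self.should_stop = should_stop

    def add_net(self, net):
        self.nets.append(net)
        return self  # returning self allows chaining

    def run(self):
        while True:
            for net in self.nets:
                net()
                # Simplification: the flag is checked after every net.
                if self.should_stop():
                    return


def make_read_step(rows, name="reader"):
    """Hypothetical analogue of execution_step(): returns (step, fields)."""
    iterator = iter(rows)
    state = {"stop": False}
    fields = [None]  # holds the latest item that was read

    def read_net():
        try:
            fields[0] = next(iterator)
        except StopIteration:
            state["stop"] = True  # end of data reached

    step = ToyStep(name + "_step", lambda: state["stop"]).add_net(read_net)
    return step, fields


def consume_all(rows):
    """Attach a consumer net to the read step and run until data is exhausted."""
    read_step, fields = make_read_step(rows)
    seen = []
    read_step.add_net(lambda: seen.append(fields[0]))
    read_step.run()
    return seen
```

Here the consumer plays the role of `consume_net` in the example above: it only looks at `fields`, and the loop ends because the read side raised the stop flag, not because the consumer counted items.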

def caffe2.python.dataio.Reader.read (self, read_net)

Append operations to read_net that will read a batch from the underlying data source.

Operations added to `read_net` must be thread-safe and atomic; that is, it should be possible to clone `read_net` and run multiple instances of it in parallel.

Args:

    read_net: the net that will be appended with read operations

Returns:

    A tuple (should_stop, fields), with:

    should_stop: BlobReference pointing to a boolean scalar blob that indicates whether the read operation was successful or whether the end of data has been reached.

    fields: A tuple of BlobReference containing the latest batch of data that was read.

Definition at line 71 of file dataio.py.
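The requirement that read operations be thread-safe and atomic can be sketched in plain Python. This is an illustration under stated assumptions, not Caffe2 code: `SharedCursor` and `parallel_read` are hypothetical names, and each worker thread stands in for one cloned instance of the read net. The point is that claiming a batch and advancing the cursor happen in a single critical section, so no row is read twice or skipped.

```python
import threading


class SharedCursor:
    """Hypothetical shared data source; next_batch is atomic under a lock."""

    def __init__(self, rows, batch_size):
        self._rows = list(rows)
        self._batch_size = batch_size
        self._pos = 0
        self._lock = threading.Lock()

    def next_batch(self):
        # Claim a slice and advance the cursor in one critical section.
        with self._lock:
            start = self._pos
            self._pos = min(start + self._batch_size, len(self._rows))
            return self._rows[start:self._pos]


def parallel_read(rows, batch_size, num_workers):
    """Run num_workers readers in parallel against one shared source."""
    source = SharedCursor(rows, batch_size)
    results = [[] for _ in range(num_workers)]

    def worker(idx):
        # Each worker plays the role of one cloned instance of the read net.
        while True:
            batch = source.next_batch()
            if not batch:  # empty batch: this instance's should_stop
                return
            results[idx].extend(batch)

    threads = [
        threading.Thread(target=worker, args=(i,)) for i in range(num_workers)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results
```

Which worker receives which batch is not deterministic, but the union of all workers' rows always equals the input exactly once.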
