| def | assertDeviceChecks (self, device_options, op, inputs, outputs_to_check, input_device_options=None, threshold=0.01) |
| def | assertGradientChecks (self, device_option, op, inputs, outputs_to_check, outputs_with_grads, grad_ops=None, threshold=0.005, stepsize=0.05, input_device_options=None) |
| def | assertReferenceChecks (self, device_option, op, inputs, reference, input_device_options=None, threshold=1e-4, output_to_grad=None, grad_reference=None, atol=None, outputs_to_check=None) |
| def | assertValidationChecks (self, device_option, op, inputs, validator, input_device_options=None, as_kwargs=True, init_net=None) |
| def | assertRunOpRaises (self, device_option, op, inputs, input_device_options=None, exception=(Exception,), regexp=None) |
| def | setUpClass (cls) |
| def | setUp (self) |
| def | tearDown (self) |

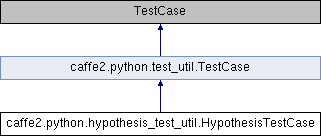

A unittest.TestCase subclass with helper functions for using the `hypothesis` (hypothesis.readthedocs.io) library.

Definition at line 381 of file hypothesis_test_util.py.

def caffe2.python.hypothesis_test_util.HypothesisTestCase.assertDeviceChecks (self, device_options, op, inputs, outputs_to_check, input_device_options=None, threshold=0.01)

Asserts that the operator computes the same outputs, regardless of which device it is executed on.

Useful for checking the consistency of GPU and CPU implementations of operators.

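Usage example:

    from caffe2.python import core
    from hypothesis import given
    import caffe2.python.hypothesis_test_util as hu
    import hypothesis.strategies as st

    class TestSum(hu.HypothesisTestCase):
        @given(inputs=hu.tensors(n=2), in_place=st.booleans(), **hu.gcs)
        def test_sum(self, inputs, in_place, gc, dc):
            op = core.CreateOperator("Sum", ["X1", "X2"],
                                     ["Y" if not in_place else "X1"])
            X1, X2 = inputs
            # Compare output 0 across every device option in dc.
            self.assertDeviceChecks(dc, op, [X1, X2], [0])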

Definition at line 395 of file hypothesis_test_util.py.
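The idea behind the device check above can be illustrated without Caffe2. The following is a minimal sketch, not the library's implementation: `assert_outputs_match`, `sum_plain`, and `sum_compensated` are hypothetical names, and the two functions merely stand in for CPU and GPU kernels of the same operator. The tolerance handling is also an assumption; the `threshold` default only mirrors the signature above.

```python
import math


def assert_outputs_match(implementations, inputs, threshold=0.01):
    """Run every implementation on the same inputs and compare each
    result element-wise against the first one (hypothetical helper)."""
    results = [impl(*inputs) for impl in implementations]
    baseline = results[0]
    for idx, result in enumerate(results[1:], start=1):
        if len(result) != len(baseline):
            raise AssertionError(f"implementation {idx}: output length differs")
        for a, b in zip(baseline, result):
            if not math.isclose(a, b, rel_tol=threshold, abs_tol=threshold):
                raise AssertionError(
                    f"implementation {idx} disagrees: {a} vs {b}")
    return baseline


# Two stand-ins for "devices": the same element-wise sum, computed two ways.
def sum_plain(x1, x2):
    return [a + b for a, b in zip(x1, x2)]


def sum_compensated(x1, x2):
    return [math.fsum((a, b)) for a, b in zip(x1, x2)]


out = assert_outputs_match([sum_plain, sum_compensated],
                           ([1.0, 2.5, -3.0], [0.5, 0.5, 3.0]))
```

Comparing every result against a single baseline keeps the check linear in the number of implementations, which is one plausible design for this kind of assertion.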

def caffe2.python.hypothesis_test_util.HypothesisTestCase.assertGradientChecks (self, device_option, op, inputs, outputs_to_check, outputs_with_grads, grad_ops=None, threshold=0.005, stepsize=0.05, input_device_options=None)

Implements a standard numerical gradient checker for the operator in question.

Useful for checking the consistency of the forward and backward implementations of operators.

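Usage example:

    from caffe2.python import core
    from hypothesis import given
    import caffe2.python.hypothesis_test_util as hu
    import hypothesis.strategies as st

    class TestSum(hu.HypothesisTestCase):
        @given(inputs=hu.tensors(n=2), in_place=st.booleans(), **hu.gcs)
        def test_sum(self, inputs, in_place, gc, dc):
            op = core.CreateOperator("Sum", ["X1", "X2"],
                                     ["Y" if not in_place else "X1"])
            X1, X2 = inputs
            # Check the gradient of output 0 with respect to input 0.
            self.assertGradientChecks(gc, op, [X1, X2], 0, [0])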

Definition at line 431 of file hypothesis_test_util.py.
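A numerical gradient check of the kind described above is commonly built on central differences. The sketch below is a standalone illustration in plain Python, not Caffe2's checker: `numeric_gradient` and `check_gradient` are hypothetical helpers, the scaled comparison rule is an assumption, and the `threshold` and `stepsize` defaults are simply borrowed from the signature above.

```python
def numeric_gradient(f, x, stepsize=0.05):
    """Central-difference estimate of df/dx_i for a scalar function f."""
    grad = []
    for i in range(len(x)):
        plus = list(x)
        minus = list(x)
        plus[i] += stepsize
        minus[i] -= stepsize
        grad.append((f(plus) - f(minus)) / (2.0 * stepsize))
    return grad


def check_gradient(f, analytic_grad, x, threshold=0.005, stepsize=0.05):
    """Return True when the analytic and numeric gradients agree.

    The comparison scales the threshold by the larger magnitude
    (an assumed rule, chosen here for illustration)."""
    numeric = numeric_gradient(f, x, stepsize)
    analytic = analytic_grad(x)
    for n, a in zip(numeric, analytic):
        if abs(n - a) > threshold * max(1.0, abs(n), abs(a)):
            return False
    return True


# Forward function and its hand-written backward pass.
def sum_of_squares(x):
    return sum(v * v for v in x)


def sum_of_squares_grad(x):
    return [2.0 * v for v in x]


point = [0.5, -1.0, 2.0]
ok = check_gradient(sum_of_squares, sum_of_squares_grad, point)
# A deliberately wrong backward pass should be rejected.
bad = check_gradient(sum_of_squares, lambda x: [3.0 * v for v in x], point)
```

For a quadratic function the central difference is exact up to floating-point rounding, so the correct gradient passes and the deliberately wrong one fails.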

def caffe2.python.hypothesis_test_util.HypothesisTestCase.assertReferenceChecks (self, device_option, op, inputs, reference, input_device_options=None, threshold=1e-4, output_to_grad=None, grad_reference=None, atol=None, outputs_to_check=None)

Runs the reference Python function implementation (effectively calling `reference(*inputs)`) and compares the result to the output of the operator, with an absolute/relative tolerance given by the `threshold` parameter.

Useful for checking that the operator implementation matches the Python (typically NumPy) implementation of the same functionality.

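Usage example:

    from caffe2.python import core
    from hypothesis import given
    import caffe2.python.hypothesis_test_util as hu
    import hypothesis.strategies as st
    import numpy as np

    class TestSoftsign(hu.HypothesisTestCase):
        @given(X=hu.tensor(), inplace=st.booleans(), **hu.gcs)
        def test_softsign(self, X, inplace, gc, dc):
            op = core.CreateOperator(
                "Softsign", ["X"], ["X" if inplace else "Y"])

            def softsign(X):
                # The reference returns a tuple with one entry per output.
                return (X / (1 + np.abs(X)),)

            self.assertReferenceChecks(gc, op, [X], softsign)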

Definition at line 568 of file hypothesis_test_util.py.
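The reference check above boils down to calling `reference(*inputs)` and comparing each returned output with the operator's output under a tolerance. Here is a minimal standalone sketch of that comparison in plain Python. It is an illustration under stated assumptions, not the library's code: `assert_matches_reference`, `softsign_op`, and `softsign_reference` are hypothetical names, and using `threshold` as both the relative and the absolute tolerance is an assumption.

```python
import math


def assert_matches_reference(op_fn, reference, inputs, threshold=1e-4):
    """Compare op_fn(*inputs) with reference(*inputs), output by output.

    Both callables return a tuple of outputs, each a list of floats."""
    actual = op_fn(*inputs)
    expected = reference(*inputs)
    if len(actual) != len(expected):
        raise AssertionError("number of outputs differs")
    for out_idx, (act, exp) in enumerate(zip(actual, expected)):
        if len(act) != len(exp):
            raise AssertionError(f"output {out_idx}: length differs")
        for a, e in zip(act, exp):
            if not math.isclose(a, e, rel_tol=threshold, abs_tol=threshold):
                raise AssertionError(f"output {out_idx}: {a} != {e}")
    return actual


# Stand-in for the operator under test: multiplies by a reciprocal.
def softsign_op(x):
    return ([v * (1.0 / (1.0 + abs(v))) for v in x],)


# Reference implementation: the textbook formula.
def softsign_reference(x):
    return ([v / (1.0 + abs(v)) for v in x],)


(result,) = assert_matches_reference(
    softsign_op, softsign_reference, ([-2.0, 0.0, 1.0, 3.0],))
```

Returning a tuple from the reference, even for a single output, mirrors the `softsign` example in the usage snippet above.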

The documentation for this class was generated from the file hypothesis_test_util.py.

Public Member Functions inherited from caffe2.python.test_util.TestCase

Public Attributes inherited from caffe2.python.test_util.TestCase