| Public Member Functions | |
|---|---|
| def | `__init__(self, optimizer, lr_lambda, last_epoch=-1)` |
| def | `state_dict(self)` |
| def | `load_state_dict(self, state_dict)` |
| def | `get_lr(self)` |

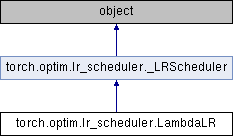

| Public Member Functions inherited from torch.optim.lr_scheduler._LRScheduler | |
|---|---|
| def | `__init__(self, optimizer, last_epoch=-1)` |
| def | `state_dict(self)` |
| def | `load_state_dict(self, state_dict)` |
| def | `get_lr(self)` |
| def | `step(self, epoch=None)` |

| Public Attributes | |
|---|---|
| optimizer | |
| lr_lambdas | |
| last_epoch | |

| Public Attributes inherited from torch.optim.lr_scheduler._LRScheduler | |
|---|---|
| optimizer | |
| base_lrs | |
| last_epoch | |

Sets the learning rate of each parameter group to the initial lr times a given function. When last_epoch=-1, the initial lr of each group is set to that group's current lr.
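Written as a formula (the symbols are notation introduced here, not names from the library): let $\eta_{g,0}$ be the initial lr of parameter group $g$ (the value kept in `base_lrs`), $\lambda_g$ the `lr_lambda` for that group, and $t$ the scheduler's `last_epoch`. The learning rate of group $g$ is then

$$
\eta_g(t) = \eta_{g,0} \cdot \lambda_g(t)
$$

Because the factor always multiplies the *initial* lr rather than the current one, the factors from successive epochs do not compound.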

Args:

optimizer (Optimizer): Wrapped optimizer.

lr_lambda (function or list): A function which computes a multiplicative factor given an integer parameter epoch, or a list of such functions, one for each group in optimizer.param_groups.

last_epoch (int): The index of the last epoch. Default: -1.
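The pure-Python sketch below illustrates how the two accepted forms of `lr_lambda` map onto parameter groups: a single function is shared by every group, while a list must provide exactly one function per group. It does not import torch; `normalize_lr_lambdas` and `lambda_lrs` are hypothetical helpers, and the `ValueError` raised on a length mismatch is an assumption about the failure mode, not a documented part of this API.

```python
from typing import Callable, List, Sequence, Union

LrLambda = Callable[[int], float]


def normalize_lr_lambdas(
    lr_lambda: Union[LrLambda, Sequence[LrLambda]], num_groups: int
) -> List[LrLambda]:
    """Hypothetical helper: return exactly one lr_lambda per parameter group."""
    if isinstance(lr_lambda, (list, tuple)):
        # A list must supply one function per group in optimizer.param_groups.
        if len(lr_lambda) != num_groups:
            raise ValueError(
                f"Expected {num_groups} lr_lambdas, but got {len(lr_lambda)}"
            )
        return list(lr_lambda)
    # A single function is shared by every parameter group.
    return [lr_lambda] * num_groups


def lambda_lrs(
    base_lrs: Sequence[float], lr_lambdas: Sequence[LrLambda], epoch: int
) -> List[float]:
    """Hypothetical helper: lr of group g = base_lrs[g] * lr_lambdas[g](epoch)."""
    return [base * fn(epoch) for base, fn in zip(base_lrs, lr_lambdas)]


# Usage: one shared decay function for two groups with different base lrs.
lambdas = normalize_lr_lambdas(lambda epoch: 0.5 ** epoch, num_groups=2)
print(lambda_lrs([0.1, 0.01], lambdas, epoch=2))
```

Sharing one function keeps the ratio between the groups' learning rates fixed; passing a list is what allows groups to follow different schedules.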

Example:

>>> # Assuming optimizer has two groups.

>>> lambda1 = lambda epoch: epoch // 30

>>> lambda2 = lambda epoch: 0.95 ** epoch

>>> scheduler = LambdaLR(optimizer, lr_lambda=[lambda1, lambda2])

>>> for epoch in range(100):

>>>     scheduler.step()

>>>     train(...)

>>>     validate(...)
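To show what the example schedule actually produces, the sketch below evaluates base lr × lambda(epoch) for both groups at a few epochs, treating `epoch` as the scheduler's `last_epoch`. The base learning rate of 0.1 for each group is a hypothetical value, and `lrs_at` is an illustrative helper, not part of `torch.optim`.

```python
# Illustrative only: base learning rates are hypothetical (0.1 for both groups).
base_lrs = [0.1, 0.1]

# The two schedules from the example above.
lambda1 = lambda epoch: epoch // 30    # integer step factor: 0, 1, 2, 3
lambda2 = lambda epoch: 0.95 ** epoch  # 5% exponential decay per epoch


def lrs_at(epoch):
    """Hypothetical helper: lr of each group = base_lr * lambda(epoch)."""
    return [base * fn(epoch) for base, fn in zip(base_lrs, (lambda1, lambda2))]


for epoch in (0, 29, 30, 60, 99):
    lr0, lr1 = lrs_at(epoch)
    print(f"epoch {epoch:2d}: group 0 lr = {lr0:.4f}, group 1 lr = {lr1:.4f}")
```

Note that `lambda1` returns 0 for epochs 0 through 29, so the first group's learning rate is 0 for the first 30 epochs and then grows in steps (0.1, 0.2, 0.3 with this base lr), while `lambda2` shrinks the second group's rate by 5% each epoch.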

Definition at line 56 of file lr_scheduler.py.

`def torch.optim.lr_scheduler.LambdaLR.load_state_dict(self, state_dict)`

Loads the scheduler's state.

Arguments:

state_dict (dict): Scheduler state. Should be an object returned from a call to `state_dict()`.

Definition at line 107 of file lr_scheduler.py.

`def torch.optim.lr_scheduler.LambdaLR.state_dict(self)`

Returns the state of the scheduler as a `dict`. It contains an entry for every variable in `self.__dict__` except the optimizer. The learning-rate lambda functions are saved only if they are callable objects; plain functions and lambdas are not saved.

Definition at line 90 of file lr_scheduler.py.
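The rule above (callable objects are saved, plain functions and lambdas are not) can be illustrated with a small pure-Python sketch that does not use torch. It assumes that "saved" means copying the callable object's `__dict__`, that a function or lambda is detected with `types.FunctionType`, and that loading updates the existing callables' attributes in place; `WarmupFactor`, `lambdas_state`, and `restore_lambdas` are hypothetical names, not library API.

```python
import types


class WarmupFactor:
    """Hypothetical callable object: its attributes can be captured in a state dict."""

    def __init__(self, warmup_epochs):
        self.warmup_epochs = warmup_epochs

    def __call__(self, epoch):
        # Linear warmup to a factor of 1.0 over warmup_epochs epochs.
        return min(1.0, (epoch + 1) / self.warmup_epochs)


def lambdas_state(lr_lambdas):
    """Callable objects contribute a copy of their __dict__;
    plain functions and lambdas are stored as None (not saved)."""
    return [
        None if isinstance(fn, types.FunctionType) else fn.__dict__.copy()
        for fn in lr_lambdas
    ]


def restore_lambdas(lr_lambdas, saved):
    """Update each callable object's attributes in place; skip None entries."""
    for fn, state in zip(lr_lambdas, saved):
        if state is not None:
            fn.__dict__.update(state)


saved = lambdas_state([WarmupFactor(5), lambda epoch: 0.95 ** epoch])
print(saved)  # [{'warmup_epochs': 5}, None]

# Restoring requires callables of the same kind to already be in place.
fresh = [WarmupFactor(1), lambda epoch: 0.95 ** epoch]
restore_lambdas(fresh, saved)
print(fresh[0].warmup_epochs)  # 5
```

Under these assumptions, a schedule whose parameters should survive a checkpoint is best written as a callable class, since a lambda's captured values are not part of the saved state.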

1.8.11
