Decays the learning rate of each parameter group by gamma once the number of epochs reaches one of the milestones. Note that this decay can happen simultaneously with other changes to the learning rate made outside this scheduler. When last_epoch=-1, the optimizer's current lr is used as the initial lr.
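The interaction with outside changes can be illustrated with a small pure-Python simulation. This is a simplified sketch, not the library's implementation: `decay_if_milestone` is a hypothetical helper, and the halving at epoch 9 stands in for any change made to the learning rate outside the scheduler.

```python
def decay_if_milestone(current_lr, epoch, milestones, gamma=0.1):
    """Hypothetical helper: scale the *current* lr by gamma at a milestone."""
    return current_lr * gamma if epoch in milestones else current_lr


milestones = [30, 80]
lr = 0.05
history = []  # history[e] is the lr used during epoch e

for epoch in range(40):
    history.append(lr)
    if epoch == 9:
        # An outside change (assumed for illustration): halve the lr by hand.
        lr *= 0.5
    # Scheduler step at the end of the epoch, moving on to epoch + 1.
    lr = decay_if_milestone(lr, epoch + 1, milestones)

print(history[9], history[10], history[30])
```

Because the decay multiplies whatever the learning rate currently is, the manual halving and the milestone decay compound: the rate is roughly 0.025 from epoch 10 and roughly 0.0025 from epoch 30.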

Args:

optimizer (Optimizer): Wrapped optimizer.

milestones (list): List of epoch indices. Must be increasing.

gamma (float): Multiplicative factor of learning rate decay. Default: 0.1.

last_epoch (int): The index of the last epoch. Default: -1.
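When nothing else modifies the learning rate, the schedule described by these arguments has a simple closed form: the initial rate times gamma raised to the number of milestones already reached. The sketch below assumes that reading; `multistep_lr` and `base_lr` are illustrative names, not part of the scheduler's API.

```python
from bisect import bisect_right


def multistep_lr(base_lr, milestones, gamma, epoch):
    """Illustrative closed form: base_lr * gamma ** (milestones reached so far)."""
    if list(milestones) != sorted(milestones):
        raise ValueError("milestones must be increasing, got {}".format(milestones))
    # bisect_right counts the milestones that are <= epoch.
    return base_lr * gamma ** bisect_right(milestones, epoch)


for epoch in (0, 29, 30, 79, 80, 99):
    print(epoch, multistep_lr(0.05, [30, 80], 0.1, epoch))
```

With `milestones=[30, 80]` and `gamma=0.1`, the rate stays at 0.05 through epoch 29, drops to about 0.005 at epoch 30, and to about 0.0005 at epoch 80 (up to floating-point rounding).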

Example:

>>> # Assuming optimizer uses lr = 0.05 for all groups
>>> # lr = 0.05     if epoch < 30
>>> # lr = 0.005    if 30 <= epoch < 80
>>> # lr = 0.0005   if epoch >= 80
>>> scheduler = MultiStepLR(optimizer, milestones=[30, 80], gamma=0.1)
>>> for epoch in range(100):
>>>     train(...)
>>>     validate(...)
>>>     scheduler.step()
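A self-contained variant of the example can be run to check the schedule. This is a minimal sketch: the tiny linear model and random inputs are placeholders for real `train(...)` and `validate(...)` code, and it calls `scheduler.step()` after `optimizer.step()`, the order expected by PyTorch 1.1.0 and later.

```python
import torch
from torch.optim import SGD
from torch.optim.lr_scheduler import MultiStepLR

# Placeholder model and optimizer (assumptions for illustration only).
model = torch.nn.Linear(4, 1)
optimizer = SGD(model.parameters(), lr=0.05)
scheduler = MultiStepLR(optimizer, milestones=[30, 80], gamma=0.1)

lrs = []  # lrs[e] is the lr in effect during epoch e
for epoch in range(100):
    lrs.append(optimizer.param_groups[0]["lr"])

    # Stand-in for one epoch of training on random data.
    optimizer.zero_grad()
    loss = model(torch.randn(8, 4)).pow(2).mean()
    loss.backward()
    optimizer.step()

    # Advance the schedule once per epoch, after the optimizer update.
    scheduler.step()

print(lrs[29], lrs[30], lrs[80])
```

Reading the rate from `optimizer.param_groups` rather than from the scheduler shows the value the optimizer actually uses, including any change made outside the scheduler.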

Definition at line 164 of file lr_scheduler.py.

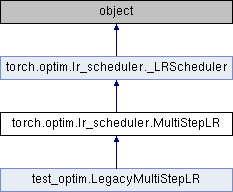

Public Member Functions inherited from torch.optim.lr_scheduler._LRScheduler

Public Attributes inherited from torch.optim.lr_scheduler._LRScheduler