Decays the learning rate of each parameter group by gamma every step_size epochs. Note that this decay can happen simultaneously with other changes made to the learning rate from outside this scheduler. When last_epoch=-1, the scheduler sets each group's initial lr to its current lr.
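The "simultaneously with other changes" remark follows from the decay being applied to each group's *current* learning rate rather than recomputed from the initial one. The sketch below illustrates that chainable behaviour without PyTorch. It assumes learning rates are stored as plain floats in a list of dicts that mimics `optimizer.param_groups`; the function `step_lr_update` is a hypothetical helper written for illustration, not PyTorch's implementation.

```python
# Hypothetical, framework-free sketch of a chainable step-decay update.
# Assumption: param_groups is a list of dicts with an "lr" float,
# imitating optimizer.param_groups. This is not PyTorch source code.

def step_lr_update(param_groups, epoch, step_size, gamma):
    """Multiply each group's current lr by gamma when epoch is a positive
    multiple of step_size; otherwise leave the lr unchanged."""
    if epoch == 0 or epoch % step_size != 0:
        return
    for group in param_groups:
        group["lr"] *= gamma


groups = [{"lr": 0.05}]
history = []
for epoch in range(1, 7):
    step_lr_update(groups, epoch, step_size=2, gamma=0.1)
    if epoch == 3:
        # An external change made outside the scheduler (here: halving the lr).
        groups[0]["lr"] *= 0.5
    history.append(groups[0]["lr"])
```

Because the update multiplies whatever value is already stored, the manual halving at epoch 3 is preserved by every later decay instead of being overwritten.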

Args:
    optimizer (Optimizer): Wrapped optimizer.
    step_size (int): Period of learning rate decay.
    gamma (float): Multiplicative factor of learning rate decay. Default: 0.1.
    last_epoch (int): The index of last epoch. Default: -1.

Example:
    >>> # Assuming optimizer uses lr = 0.05 for all groups
    >>> # lr = 0.05     if epoch < 30
    >>> # lr = 0.005    if 30 <= epoch < 60
    >>> # lr = 0.0005   if 60 <= epoch < 90
    >>> # ...
    >>> scheduler = StepLR(optimizer, step_size=30, gamma=0.1)
    >>> for epoch in range(100):
    >>>     train(...)
    >>>     validate(...)
    >>>     scheduler.step()  # step the scheduler after the epoch's optimizer updates
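When nothing outside the scheduler touches the learning rate, the schedule in the example comments reduces to a closed form, lr = base_lr * gamma ** (epoch // step_size). The sketch below checks that formula against the commented values; `step_lr` is a hypothetical helper for illustration and assumes no external lr changes.

```python
# Hypothetical closed-form helper; valid only when the lr is changed
# exclusively by the step decay (no external modifications).

def step_lr(base_lr, epoch, step_size, gamma=0.1):
    """Learning rate after `epoch` epochs of step decay."""
    return base_lr * gamma ** (epoch // step_size)


# Schedule for the example above: base lr 0.05, step_size=30, gamma=0.1.
schedule = [step_lr(0.05, epoch, step_size=30) for epoch in range(100)]
```

Integer division `epoch // step_size` counts how many full periods have elapsed, so the rate stays flat within each 30-epoch window and drops by a factor of gamma at epochs 30, 60 and 90.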

Definition at line 126 of file lr_scheduler.py.

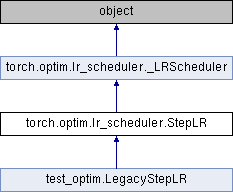

Inherits public member functions and public attributes from torch.optim.lr_scheduler._LRScheduler.

1.8.11