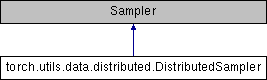

Sampler that restricts data loading to a subset of the dataset.

It is especially useful in conjunction with :class:`torch.nn.parallel.DistributedDataParallel`. In such a case, each process can pass a DistributedSampler instance as a DataLoader sampler and load a subset of the original dataset that is exclusive to it.
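A minimal usage sketch of the pattern described above. The toy dataset, batch size, and the loop over ranks are assumptions for illustration; `num_replicas` and `rank` are passed explicitly so the example runs in a single process without initializing `torch.distributed`. In real distributed training each process would construct only its own sampler.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset
from torch.utils.data.distributed import DistributedSampler

# Toy dataset of 10 items whose values equal their indices (illustrative only).
dataset = TensorDataset(torch.arange(10))

# Simulate two processes in one script by building one sampler per rank.
num_replicas = 2
seen = []
for rank in range(num_replicas):
    sampler = DistributedSampler(dataset, num_replicas=num_replicas, rank=rank)
    # The sampler is handed to the DataLoader in place of shuffle=True.
    loader = DataLoader(dataset, batch_size=5, sampler=sampler)
    items = [int(x) for (batch,) in loader for x in batch]
    seen.append(items)

# Each simulated rank loads its own half of the dataset.
print(seen)
```

Because 10 divides evenly by 2 here, the two subsets are disjoint and together cover the whole dataset.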

.. note::
    Dataset is assumed to be of constant size.

Arguments:

dataset: Dataset used for sampling.

num_replicas (optional): Number of processes participating in distributed training.

rank (optional): Rank of the current process within num_replicas.
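To make the roles of `num_replicas` and `rank` concrete, here is a pure-Python sketch of one way a per-process subset can be derived. It assumes a strided split with wrap-around padding so that every rank receives the same number of samples. The helper name `shard_indices` is hypothetical, shuffling is left out, and this is not presented as the library's exact code.

```python
import math


def shard_indices(dataset_len, num_replicas, rank):
    """Return the dataset indices assigned to `rank` (hypothetical helper)."""
    # Every rank gets the same number of samples, rounded up.
    num_samples = math.ceil(dataset_len / num_replicas)
    total_size = num_samples * num_replicas

    indices = list(range(dataset_len))
    # Pad by repeating leading indices so the list divides evenly.
    indices += indices[: total_size - len(indices)]

    # Strided selection: rank r takes positions r, r + num_replicas, ...
    return indices[rank:total_size:num_replicas]


shards = [shard_indices(10, 3, r) for r in range(3)]
print(shards)  # [[0, 3, 6, 9], [1, 4, 7, 0], [2, 5, 8, 1]]
```

Under this assumption, when the dataset size is not divisible by `num_replicas`, a few padded indices appear on more than one rank, so the subsets are only strictly exclusive in the evenly divisible case.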

Definition at line 7 of file distributed.py.

1.8.11
