Optimizer that requires the loss function to be supplied to the step() function, as it may evaluate the loss function multiple times per step.

#include <optimizer.h>

Public Types | |

| using | LossClosure = std::function< Tensor()> |

| A loss function closure, which is expected to return the loss value. | |

Public Member Functions | |

| virtual Tensor | step (LossClosure closure)=0 |

Public Member Functions inherited from torch::optim::detail::OptimizerBase | |

| OptimizerBase (std::vector< Tensor > parameters) | |

| Constructs the Optimizer from a vector of parameters. | |

| void | add_parameters (const std::vector< Tensor > &parameters) |

| Adds the given vector of parameters to the optimizer's parameter list. | |

| virtual void | zero_grad () |

| Zeros out the gradients of all parameters. | |

| const std::vector< Tensor > & | parameters () const noexcept |

| Provides a const reference to the parameters this optimizer holds. | |

| std::vector< Tensor > & | parameters () noexcept |

| Provides a reference to the parameters this optimizer holds. | |

| size_t | size () const noexcept |

| Returns the number of parameters referenced by the optimizer. | |

| virtual void | save (serialize::OutputArchive &archive) const |

| Serializes the optimizer state into the given archive. | |

| virtual void | load (serialize::InputArchive &archive) |

| Deserializes the optimizer state from the given archive. | |

Additional Inherited Members | |

Protected Member Functions inherited from torch::optim::detail::OptimizerBase | |

| template<typename T > | |

| T & | buffer_at (std::vector< T > &buffers, size_t index) |

| Accesses a buffer at the given index. | |

| Tensor & | buffer_at (std::vector< Tensor > &buffers, size_t index) |

| Accesses a buffer at the given index and converts it to the type of the parameter at the corresponding index (a no-op if the types already match). | |

Protected Attributes inherited from torch::optim::detail::OptimizerBase | |

| std::vector< Tensor > | parameters_ |

| The parameters this optimizer optimizes. | |

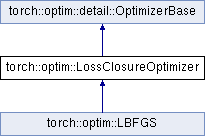

Optimizer that requires the loss function to be supplied to the step() function, as it may evaluate the loss function multiple times per step.

Examples of such algorithms are conjugate gradient and LBFGS. The step() function also returns the loss value.
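The following is a minimal, self-contained sketch of why such an optimizer needs the closure rather than a precomputed loss. It does not use the library: `Scalar` (a plain `double`) stands in for `Tensor`, and `ToyLossClosureOptimizer`, `BacktrackingDescent`, and `demo_final_x` are hypothetical names invented for illustration. The toy optimizer is gradient descent with a backtracking line search, not conjugate gradient or LBFGS; it was chosen only because it also re-evaluates the loss several times within one `step()` call.

```cpp
#include <cstddef>
#include <functional>
#include <utility>
#include <vector>

// Assumption: a plain double stands in for Tensor in this sketch.
using Scalar = double;
using LossClosure = std::function<Scalar()>;

// Hypothetical base class that mirrors the documented step(closure) interface.
class ToyLossClosureOptimizer {
 public:
  explicit ToyLossClosureOptimizer(std::vector<Scalar*> parameters)
      : parameters_(std::move(parameters)) {}
  virtual ~ToyLossClosureOptimizer() = default;
  virtual Scalar step(LossClosure closure) = 0;

 protected:
  std::vector<Scalar*> parameters_;  // the parameters being optimized
};

// Hypothetical optimizer: gradient descent with a backtracking line search.
class BacktrackingDescent : public ToyLossClosureOptimizer {
 public:
  using ToyLossClosureOptimizer::ToyLossClosureOptimizer;

  Scalar step(LossClosure closure) override {
    const Scalar start_loss = closure();  // first evaluation

    // Forward-difference gradient: one extra evaluation per parameter.
    const Scalar eps = 1e-6;
    std::vector<Scalar> saved(parameters_.size());
    std::vector<Scalar> grad(parameters_.size());
    Scalar grad_sq = 0.0;
    for (std::size_t i = 0; i < parameters_.size(); ++i) {
      saved[i] = *parameters_[i];
      *parameters_[i] = saved[i] + eps;
      grad[i] = (closure() - start_loss) / eps;
      *parameters_[i] = saved[i];
      grad_sq += grad[i] * grad[i];
    }

    // Halve the step size until the loss decreases sufficiently
    // (Armijo condition); each attempt is another evaluation.
    Scalar lr = 1.0;
    for (int attempt = 0; attempt < 20; ++attempt) {
      for (std::size_t i = 0; i < parameters_.size(); ++i) {
        *parameters_[i] = saved[i] - lr * grad[i];
      }
      const Scalar loss = closure();
      if (loss <= start_loss - 0.1 * lr * grad_sq) {
        return loss;  // like the documented step(), return the loss value
      }
      lr *= 0.5;
    }

    // No acceptable step found: restore the parameters.
    for (std::size_t i = 0; i < parameters_.size(); ++i) {
      *parameters_[i] = saved[i];
    }
    return start_loss;
  }
};

// Usage: one step on the loss (x - 3)^2, starting from x = 0.
inline Scalar demo_final_x() {
  Scalar x = 0.0;
  std::vector<Scalar*> params{&x};
  BacktrackingDescent optimizer(params);
  optimizer.step([&x] { return (x - 3.0) * (x - 3.0); });
  return x;
}
```

Because the closure captures the parameter by reference, every call inside `step()` sees the current trial value, which is what lets the optimizer probe several candidate updates before committing to one.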

Definition at line 110 of file optimizer.h.
