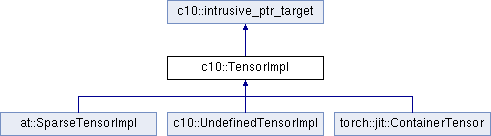

The low-level representation of a tensor, which contains a pointer to a storage (which contains the actual data) and metadata (e.g., sizes and strides) describing this particular view of the data as a tensor. More...

#include <TensorImpl.h>

Public Member Functions | |

| TensorImpl (TensorTypeId type_id, const caffe2::TypeMeta &data_type, Allocator *allocator, bool is_variable) | |

| Construct a 1-dim 0-size tensor with the given settings. More... | |

| TensorImpl (Storage &&storage, TensorTypeId type_id, bool is_variable) | |

| Construct a 1-dim 0-size tensor backed by the given storage. | |

| TensorImpl (const TensorImpl &)=delete | |

| TensorImpl & | operator= (const TensorImpl &)=delete |

| TensorImpl (TensorImpl &&)=default | |

| TensorImpl & | operator= (TensorImpl &&)=default |

| virtual void | release_resources () override |

| Release (decref) storage, and any other external allocations. More... | |

| TensorTypeId | type_id () const |

| Return the TensorTypeId corresponding to this Tensor. More... | |

| virtual IntArrayRef | sizes () const |

| Return a reference to the sizes of this tensor. More... | |

| virtual IntArrayRef | strides () const |

| Return a reference to the strides of this tensor. More... | |

| virtual int64_t | dim () const |

| Return the number of dimensions of this tensor. More... | |

| virtual bool | has_storage () const |

| True if this tensor has storage. More... | |

| virtual const Storage & | storage () const |

| Return the underlying storage of a Tensor. More... | |

| virtual int64_t | numel () const |

| The number of elements in a tensor. More... | |

| virtual bool | is_contiguous () const |

| Whether or not a tensor is laid out in contiguous memory. More... | |

| bool | is_sparse () const |

| bool | is_cuda () const |

| bool | is_hip () const |

| int64_t | get_device () const |

| Device | device () const |

| Layout | layout () const |

| virtual TensorImpl * | maybe_zero_dim (bool condition_when_zero_dim) |

If condition_when_zero_dim is true, and the tensor is a 1-dim, 1-size tensor, reshape the tensor into a 0-dim tensor (scalar). More... | |

| bool | is_wrapped_number () const |

| True if a tensor was auto-wrapped from a C++ or Python number. More... | |

| void | set_wrapped_number (bool value) |

| Set whether or not a tensor was auto-wrapped from a C++ or Python number. More... | |

| void | set_requires_grad (bool requires_grad) |

| Set whether or not a tensor requires gradient. More... | |

| bool | requires_grad () const |

| True if a tensor requires gradient. More... | |

| at::Tensor & | grad () |

| Return a mutable reference to the gradient. More... | |

| const at::Tensor & | grad () const |

| Return the accumulated gradient of a tensor. More... | |

| template<typename T > | |

| T * | data () const |

| Return a typed data pointer to the actual data which this tensor refers to. More... | |

| void * | data () const |

| Return a void* data pointer to the actual data which this tensor refers to. More... | |

| virtual void * | slow_data () const |

| This is just like data(), except it works with Variables. More... | |

| template<typename T > | |

| T * | unsafe_data () const |

| Like data<T>(), but performs no checks. More... | |

| const caffe2::TypeMeta & | dtype () const |

| Returns the TypeMeta of a tensor, which describes what data type it is (e.g., int, float, ...) | |

| size_t | itemsize () const |

| Return the size of a single element of this tensor in bytes. | |

| virtual int64_t | storage_offset () const |

| Return the offset in number of elements into the storage that this tensor points to. More... | |

| bool | is_empty () const |

| True if a tensor has no elements (e.g., numel() == 0). | |

| virtual void | resize_dim (int64_t ndim) |

| Change the dimensionality of a tensor. More... | |

| virtual void | set_size (int64_t dim, int64_t new_size) |

| Change the size at some dimension. More... | |

| virtual void | set_stride (int64_t dim, int64_t new_stride) |

| Change the stride at some dimension. More... | |

| virtual void | set_storage_offset (int64_t storage_offset) |

| Set the offset into the storage of this tensor. More... | |

| void | set_sizes_contiguous (IntArrayRef new_size) |

| Like set_sizes_and_strides but assumes contiguous strides. More... | |

| void | set_sizes_and_strides (IntArrayRef new_size, IntArrayRef new_stride) |

| Set the sizes and strides of a tensor. More... | |

| virtual int64_t | size (int64_t d) const |

| Return the size of a tensor at some dimension. | |

| virtual int64_t | stride (int64_t d) const |

| Return the stride of a tensor at some dimension. | |

| bool | is_variable () const |

| True if a tensor is a variable. More... | |

| virtual void | set_allow_tensor_metadata_change (bool value) |

| Set whether a tensor allows changes to its metadata (e.g. More... | |

| virtual bool | allow_tensor_metadata_change () const |

| True if a tensor allows changes to its metadata (e.g. More... | |

| void | set_autograd_meta (std::unique_ptr< c10::AutogradMetaInterface > autograd_meta) |

| Set the pointer to autograd metadata. | |

| c10::AutogradMetaInterface * | autograd_meta () const |

| Return the pointer to autograd metadata. | |

| std::unique_ptr< c10::AutogradMetaInterface > | detach_autograd_meta () |

| Detach the autograd metadata unique_ptr from this tensor, and return it. | |

| virtual c10::intrusive_ptr< TensorImpl > | shallow_copy_and_detach () const |

| DeviceType | device_type () const |

| The device type of a Tensor, e.g., DeviceType::CPU or DeviceType::CUDA. | |

| Device | GetDevice () const |

| The device of a Tensor; e.g., Device(kCUDA, 1) (the 1-index CUDA device). | |

| void | Extend (int64_t num, float growthPct) |

| Extends the outer-most dimension of this tensor by num elements, preserving the existing data. More... | |

| template<class T > | |

| void | ReserveSpace (const T &outer_dim) |

| Reserve space for the underlying tensor. More... | |

| template<typename... Ts> | |

| void | Resize (Ts...dim_source) |

| Resizes a tensor. More... | |

| void | Reshape (const std::vector< int64_t > &dims) |

| Resizes the tensor without touching underlying storage. More... | |

| void | FreeMemory () |

| Release whatever memory the tensor was holding but keep size and type information. More... | |

| void | ShareData (const TensorImpl &src) |

| Shares the data with another tensor. More... | |

| void | ShareExternalPointer (DataPtr &&data_ptr, const caffe2::TypeMeta &data_type, size_t capacity) |

| void * | raw_mutable_data (const caffe2::TypeMeta &meta) |

| Returns a mutable raw pointer of the underlying storage. More... | |

| template<typename T > | |

| T * | mutable_data () |

| Returns a typed pointer of the underlying storage. More... | |

| bool | storage_initialized () const noexcept |

| True if a tensor is storage initialized. More... | |

| bool | dtype_initialized () const noexcept |

| True if a tensor is dtype initialized. More... | |

| void | set_storage (at::Storage storage) |

Protected Member Functions | |

| void | refresh_numel () |

| Recompute the cached numel of a tensor. More... | |

| void | refresh_contiguous () |

| Recompute the cached contiguity of a tensor. More... | |

Protected Member Functions inherited from c10::intrusive_ptr_target Protected Member Functions inherited from c10::intrusive_ptr_target | |

| intrusive_ptr_target (intrusive_ptr_target &&other) noexcept | |

| intrusive_ptr_target & | operator= (intrusive_ptr_target &&other) noexcept |

| intrusive_ptr_target (const intrusive_ptr_target &other) noexcept | |

| intrusive_ptr_target & | operator= (const intrusive_ptr_target &other) noexcept |

Protected Attributes | |

| Storage | storage_ |

| std::unique_ptr< c10::AutogradMetaInterface > | autograd_meta_ = nullptr |

| SmallVector< int64_t, 5 > | sizes_ |

| SmallVector< int64_t, 5 > | strides_ |

| int64_t | storage_offset_ = 0 |

| int64_t | numel_ = 1 |

| caffe2::TypeMeta | data_type_ |

| TensorTypeId | type_id_ |

| bool | is_contiguous_ = true |

| bool | is_variable_ = false |

| bool | is_wrapped_number_ = false |

| bool | allow_tensor_metadata_change_ = true |

| bool | reserved_ = false |

The low-level representation of a tensor, which contains a pointer to a storage (which contains the actual data) and metadata (e.g., sizes and strides) describing this particular view of the data as a tensor.

Some basic characteristics about our in-memory representation of tensors:

For backwards-compatibility reasons, a tensor may be in an uninitialized state. A tensor may be uninitialized in the following two ways:

All combinations of these two uninitialized states are possible. Consider the following transcript in idiomatic Caffe2 API:

Tensor x(CPU); // x is storage-initialized, dtype-UNINITIALIZED x.Resize(4); // x is storage-UNINITIALIZED, dtype-UNINITIALIZED x.mutable_data<float>(); // x is storage-initialized, dtype-initialized x.FreeMemory(); // x is storage-UNINITIALIZED, dtype-initialized.

All other fields on tensor are always initialized. In particular, size is always valid. (Historically, a tensor declared as Tensor x(CPU) also had uninitialized size, encoded as numel == -1, but we have now decided to default to zero size, resulting in numel == 0).

Uninitialized storages MUST be uniquely owned, to keep our model simple. Thus, we will reject operations which could cause an uninitialized storage to become shared (or a shared storage to become uninitialized, e.g., from FreeMemory).

In practice, tensors which are storage-UNINITIALIZED and dtype-UNINITIALIZED are extremely ephemeral: essentially, after you do a Resize(), you basically always call mutable_data() immediately afterwards. Most functions are not designed to work if given a storage-UNINITIALIZED, dtype-UNINITIALIZED tensor.

We intend to eliminate all uninitialized states, so that every tensor is fully initialized in all fields. Please do not write new code that depends on these uninitialized states.

Definition at line 211 of file TensorImpl.h.

| c10::TensorImpl::TensorImpl | ( | TensorTypeId | type_id, |

| const caffe2::TypeMeta & | data_type, | ||

| Allocator * | allocator, | ||

| bool | is_variable | ||

| ) |

Construct a 1-dim 0-size tensor with the given settings.

The provided allocator will be used to allocate data on subsequent resize.

Definition at line 36 of file TensorImpl.cpp.

|

inlinevirtual |

True if a tensor allows changes to its metadata (e.g.

sizes / strides / storage / storage_offset).

Definition at line 821 of file TensorImpl.h.

|

inline |

Return a typed data pointer to the actual data which this tensor refers to.

This checks that the requested type (from the template parameter) matches the internal type of the tensor.

It is invalid to call data() on a dtype-uninitialized tensor, even if the size is 0.

WARNING: If a tensor is not contiguous, you MUST use strides when performing index calculations to determine the location of elements in the tensor. We recommend using 'TensorAccessor' to handle this computation for you; this class is available from 'Tensor'.

WARNING: It is NOT valid to call this method on a Variable. See Note [We regret making Variable hold a Tensor]

Definition at line 564 of file TensorImpl.h.

|

inline |

Return a void* data pointer to the actual data which this tensor refers to.

It is invalid to call data() on a dtype-uninitialized tensor, even if the size is 0.

WARNING: The data pointed to by this tensor may not contiguous; do NOT assume that itemsize() * numel() is sufficient to compute the bytes that can be validly read from this tensor.

WARNING: It is NOT valid to call this method on a Variable. See Note [We regret making Variable hold a Tensor]

Definition at line 595 of file TensorImpl.h.

|

virtual |

Return the number of dimensions of this tensor.

Note that 0-dimension represents a Tensor that is a Scalar, e.g., one that has a single element.

Reimplemented in torch::jit::ContainerTensor, at::SparseTensorImpl, and c10::UndefinedTensorImpl.

Definition at line 91 of file TensorImpl.cpp.

|

inlinenoexcept |

True if a tensor is dtype initialized.

A tensor allocated with Caffe2-style constructors is dtype uninitialized until the first time mutable_data<T>() is called.

Definition at line 1246 of file TensorImpl.h.

|

inline |

Extends the outer-most dimension of this tensor by num elements, preserving the existing data.

The underlying data may be reallocated in order to accommodate the new elements, in which case this tensors' capacity is grown at a factor of growthPct. This ensures that Extend runs on an amortized O(1) time complexity.

This op is auto-asynchronous if the underlying device (CUDA) supports it.

Definition at line 904 of file TensorImpl.h.

|

inline |

Release whatever memory the tensor was holding but keep size and type information.

Subsequent call to mutable_data will trigger new memory allocation.

Definition at line 1071 of file TensorImpl.h.

| at::Tensor & c10::TensorImpl::grad | ( | ) |

Return a mutable reference to the gradient.

This is conventionally used as t.grad() = x to set a gradient to a completely new tensor.

It is only valid to call this method on a Variable. See Note [Tensor versus Variable in C++].

Definition at line 20 of file TensorImpl.cpp.

| const at::Tensor & c10::TensorImpl::grad | ( | ) | const |

Return the accumulated gradient of a tensor.

This gradient is written into when performing backwards, when this tensor is a leaf tensor.

It is only valid to call this method on a Variable. See Note [Tensor versus Variable in C++].

Definition at line 28 of file TensorImpl.cpp.

|

virtual |

True if this tensor has storage.

See storage() for details.

Reimplemented in at::SparseTensorImpl, and c10::UndefinedTensorImpl.

Definition at line 113 of file TensorImpl.cpp.

|

inlinevirtual |

Whether or not a tensor is laid out in contiguous memory.

Tensors with non-trivial strides are not contiguous. See compute_contiguous() for the exact definition of whether or not a tensor is contiguous or not.

Reimplemented in at::SparseTensorImpl.

Definition at line 331 of file TensorImpl.h.

|

inline |

True if a tensor is a variable.

See Note [Tensor versus Variable in C++]

Definition at line 809 of file TensorImpl.h.

|

inline |

True if a tensor was auto-wrapped from a C++ or Python number.

For example, when you write 't + 2', 2 is auto-wrapped into a Tensor with is_wrapped_number_ set to true.

Wrapped numbers do not participate in the result type computation for mixed-type operations if there are any Tensors that are not wrapped numbers. This is useful, because we want 't + 2' to work with any type of tensor, not just LongTensor (which is what integers in Python represent).

Otherwise, they behave like their non-wrapped equivalents. See [Result type computation] in TensorIterator.h.

Why did we opt for wrapped numbers, as opposed to just having an extra function add(Tensor, Scalar)? This helps greatly reduce the amount of code we have to write for add, when actually a Tensor-Scalar addition is really just a Tensor-Tensor addition when the RHS is 0-dim (except for promotion behavior.)

WARNING: It is NOT valid to call this method on a Variable. See Note [We regret making Variable hold a Tensor]

Definition at line 441 of file TensorImpl.h.

|

virtual |

If condition_when_zero_dim is true, and the tensor is a 1-dim, 1-size tensor, reshape the tensor into a 0-dim tensor (scalar).

This helper function is called from generated wrapper code, to help "fix up" tensors that legacy code didn't generate in the correct shape. For example, suppose that we have a legacy function 'add' which produces a tensor which is the same shape as its inputs; however, if the inputs were zero-dimensional, it produced a 1-dim 1-size tensor (don't ask). result->maybe_zero_dim(lhs->dim() == 0 && rhs->dim() == 0) will be called, correctly resetting the dimension to 0 when when the inputs had 0-dim.

As we teach more and more of TH to handle 0-dim correctly, this function will become less necessary. At the moment, it is often called from functions that correctly handle the 0-dim case, and is just dead code in this case. In the glorious future, this function will be eliminated entirely.

Reimplemented in at::SparseTensorImpl.

Definition at line 105 of file TensorImpl.cpp.

|

inline |

Returns a typed pointer of the underlying storage.

For fundamental types, we reuse possible existing storage if there is sufficient capacity.

Definition at line 1221 of file TensorImpl.h.

|

inlinevirtual |

The number of elements in a tensor.

WARNING: Previously, if you were using the Caffe2 API, you could test numel() == -1 to see if a tensor was uninitialized. This is no longer true; numel always accurately reports the product of sizes of a tensor.

Definition at line 317 of file TensorImpl.h.

|

inline |

Returns a mutable raw pointer of the underlying storage.

Since we will need to know the type of the data for allocation, a TypeMeta object is passed in to specify the necessary information. This is conceptually equivalent of calling mutable_data<T>() where the TypeMeta parameter meta is derived from the type T. This function differs from mutable_data<T>() in the sense that the type T can be specified during runtime via the TypeMeta object.

If the existing data does not match the desired type, it will be deleted and a new storage will be created.

Definition at line 1160 of file TensorImpl.h.

|

inlineprotected |

Recompute the cached contiguity of a tensor.

Call this if you modify sizes or strides.

Definition at line 1358 of file TensorImpl.h.

|

inlineprotected |

Recompute the cached numel of a tensor.

Call this if you modify sizes.

Definition at line 1349 of file TensorImpl.h.

|

overridevirtual |

Release (decref) storage, and any other external allocations.

This override is for intrusive_ptr_target and is used to implement weak tensors.

Reimplemented from c10::intrusive_ptr_target.

Definition at line 85 of file TensorImpl.cpp.

|

inline |

True if a tensor requires gradient.

Tensors which require gradient have history tracked for any operations performed on them, so that we can automatically differentiate back to them. A tensor that requires gradient and has no history is a "leaf" tensor, which we accumulate gradients into.

It is only valid to call this method on a Variable. See Note [Tensor versus Variable in C++].

Definition at line 521 of file TensorImpl.h.

|

inline |

Reserve space for the underlying tensor.

This must be called after Resize(), since we only specify the first dimension This does not copy over the old data to the newly allocated space

Definition at line 969 of file TensorImpl.h.

|

inline |

Resizes the tensor without touching underlying storage.

This requires the total size of the tensor to remains constant.

Definition at line 1043 of file TensorImpl.h.

|

inline |

Resizes a tensor.

Resize takes in a vector of ints specifying the dimensions of the tensor. You can pass in an empty vector to specify that it is a scalar (i.e. containing one single item).

The underlying storage may be deleted after calling Resize: if the new shape leads to a different number of items in the tensor, the old memory is deleted and new memory will be allocated next time you call mutable_data(). However, if the shape is different but the total number of items is the same, the underlying storage is kept.

This method respects caffe2_keep_on_shrink. Consult the internal logic of this method to see exactly under what circumstances this flag matters.

Definition at line 1014 of file TensorImpl.h.

|

inlinevirtual |

Change the dimensionality of a tensor.

This is truly a resize: old sizes, if they are still valid, are preserved (this invariant is utilized by some call-sites, e.g., the implementation of squeeze, which mostly wants the sizes to stay the same). New dimensions are given zero size and zero stride; this is probably not what you want–you should set_size/set_stride afterwards.

TODO: This should be jettisoned in favor of set_sizes_and_strides, which is harder to misuse.

Reimplemented in at::SparseTensorImpl.

Definition at line 672 of file TensorImpl.h.

|

inlinevirtual |

Set whether a tensor allows changes to its metadata (e.g.

sizes / strides / storage / storage_offset).

Definition at line 814 of file TensorImpl.h.

|

inline |

Set whether or not a tensor requires gradient.

It is only valid to call this method on a Variable. See Note [Tensor versus Variable in C++].

Definition at line 503 of file TensorImpl.h.

|

inlinevirtual |

Change the size at some dimension.

This DOES NOT update strides; thus, most changes to size will not preserve contiguity. You probably also want to call set_stride() when you call this.

TODO: This should be jettisoned in favor of set_sizes_and_strides, which is harder to misuse.

Reimplemented in at::SparseTensorImpl.

Definition at line 688 of file TensorImpl.h.

|

inline |

Set the sizes and strides of a tensor.

WARNING: This function does not check if the requested sizes/strides are in bounds for the storage that is allocated; this is the responsibility of the caller

WARNING: It is NOT valid to call this method on a Variable. See Note [We regret making Variable hold a Tensor]

Definition at line 755 of file TensorImpl.h.

|

inline |

Like set_sizes_and_strides but assumes contiguous strides.

WARNING: This function does not check if the requested sizes/strides are in bounds for the storage that is allocated; this is the responsibility of the caller

WARNING: It is NOT valid to call this method on a Variable. See Note [We regret making Variable hold a Tensor]

Definition at line 730 of file TensorImpl.h.

|

inlinevirtual |

Set the offset into the storage of this tensor.

WARNING: This does NOT check if the tensor is in bounds for the new location at the storage; the caller is responsible for checking this (and resizing if necessary.)

Reimplemented in at::SparseTensorImpl.

Definition at line 715 of file TensorImpl.h.

|

inlinevirtual |

Change the stride at some dimension.

TODO: This should be jettisoned in favor of set_sizes_and_strides, which is harder to misuse.

Reimplemented in at::SparseTensorImpl.

Definition at line 701 of file TensorImpl.h.

|

inline |

Set whether or not a tensor was auto-wrapped from a C++ or Python number.

You probably don't want to call this, unless you are writing binding code.

WARNING: It is NOT valid to call this method on a Variable. See Note [We regret making Variable hold a Tensor]

Definition at line 454 of file TensorImpl.h.

|

inline |

Shares the data with another tensor.

To share data between two tensors, the sizes of the two tensors must be equal already. The reason we do not implicitly do a Resize to make the two tensors have the same shape is that we want to allow tensors of different shapes but the same number of items to still be able to share data. This allows one to e.g. have a n-dimensional Tensor and a flattened version sharing the same underlying storage.

The source tensor should already have its data allocated.

Definition at line 1090 of file TensorImpl.h.

|

virtual |

Return a reference to the sizes of this tensor.

This reference remains valid as long as the tensor is live and not resized.

Reimplemented in torch::jit::ContainerTensor, at::SparseTensorImpl, and c10::UndefinedTensorImpl.

Definition at line 59 of file TensorImpl.cpp.

|

inlinevirtual |

This is just like data(), except it works with Variables.

This function will go away once Variable and Tensor are merged. See Note [We regret making Variable hold a Tensor]

Definition at line 609 of file TensorImpl.h.

|

virtual |

Return the underlying storage of a Tensor.

Multiple tensors may share a single storage. A Storage is an impoverished, Tensor-like class which supports far less operations than Tensor.

Avoid using this method if possible; try to use only Tensor APIs to perform operations.

Reimplemented in torch::jit::ContainerTensor, at::SparseTensorImpl, and c10::UndefinedTensorImpl.

Definition at line 117 of file TensorImpl.cpp.

|

inlinenoexcept |

True if a tensor is storage initialized.

A tensor may become storage UNINITIALIZED after a Resize() or FreeMemory()

Definition at line 1237 of file TensorImpl.h.

|

inlinevirtual |

Return the offset in number of elements into the storage that this tensor points to.

Most tensors have storage_offset() == 0, but, for example, an index into a tensor will have a non-zero storage_offset().

WARNING: This is NOT computed in bytes.

XXX: The only thing stopping this function from being virtual is Variable.

Reimplemented in at::SparseTensorImpl, and c10::UndefinedTensorImpl.

Definition at line 650 of file TensorImpl.h.

|

virtual |

Return a reference to the strides of this tensor.

This reference remains valid as long as the tensor is live and not restrided.

Reimplemented in torch::jit::ContainerTensor, at::SparseTensorImpl, and c10::UndefinedTensorImpl.

Definition at line 63 of file TensorImpl.cpp.

|

inline |

Return the TensorTypeId corresponding to this Tensor.

In the future, this will be the sole piece of information required to dispatch to an operator; however, at the moment, it is not used for dispatch.

type_id() and type() are NOT in one-to-one correspondence; we only have a single type_id() for CPU tensors, but many Types (CPUFloatTensor, CPUDoubleTensor...)

Definition at line 274 of file TensorImpl.h.

|

inline |

Like data<T>(), but performs no checks.

You are responsible for ensuring that all invariants required by data() are upheld here.

WARNING: It is NOT valid to call this method on a Variable. See Note [We regret making Variable hold a Tensor]

Definition at line 621 of file TensorImpl.h.

1.8.11

1.8.11