Public Member Functions

| Type | Member | Description |
|---|---|---|
| | CUDARecurrentNetworkExecutor (const NetDef &step_net_def, std::map< string, string > &recurrent_input_map, std::string timestep_blob) | |
| void | setMaxStreams (int n) | |

Public Member Functions inherited from caffe2::RecurrentNetworkExecutorBase

| Type | Member | Description |
|---|---|---|
| void | EnsureTimestepInitialized (int t, Workspace *ws, const std::vector< std::unique_ptr< ObserverBase< OperatorBase >>> &observers_list) | Callers must call EnsureTimestepInitialized before starting execution for each of the relevant timesteps. |
| void | SetMaxParallelTimesteps (int p) | Set limit for the number of timesteps that run in parallel. |
| size_t | NumObserversStepNet () | |

Protected Member Functions

| Type | Member | Description |
|---|---|---|
| bool | Run (int T) override | |
| bool | RunBackwards (int T) override | |
| bool | ignoreLinkDependencies () override | |
| void | AnalyzeOps () override | |

Protected Member Functions inherited from caffe2::RecurrentNetworkExecutorBase

| Type | Member | Description |
|---|---|---|
| | RecurrentNetworkExecutorBase (const NetDef &step_net_def, std::map< string, string > &recurrent_input_map, std::string timestep_blob) | |
| void | PrintInfo (int t) | For debug purposes, print the dependency structure. |

Data Fields inherited from caffe2::RecurrentNetworkExecutorBase

| Type | Member |
|---|---|
| bool | debug_ = false |

Protected Attributes inherited from caffe2::RecurrentNetworkExecutorBase

| Type | Member |
|---|---|
| std::vector< std::vector< RNNNetOperator > > | timestep_ops_ |
| std::vector< OperatorBase * > | op_ptrs_ |
| std::vector< RNNNetOperator > | timestep_ops_template_ |
| NetDef | step_net_def_ |
| std::vector< std::vector< string > > | op_deps_ |
| std::vector< Workspace * > | workspaces_ |
| std::map< string, string > | recurrent_input_map_ |
| std::string | timestep_blob_ |
| int | max_parallel_timesteps_ = -1 |
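The brief descriptions above imply a calling order: optionally set a parallelism limit, make sure every timestep that will run has been initialized, then run. The C++ sketch below illustrates that order with a hypothetical `MockStepExecutor`. It is not caffe2 code: it drops the `Workspace *` and observer-list arguments of the real EnsureTimestepInitialized, executes no operators, and reports a missed initialization by returning false, which is only this mock's way of surfacing the documented precondition. Treating a non-positive limit (including the default of -1 listed for max_parallel_timesteps_) as "unlimited" is likewise an assumption of the sketch.

```cpp
#include <algorithm>
#include <cassert>
#include <set>

// Hypothetical stand-in for the executor's calling contract; NOT the caffe2
// class. No operators run; it only tracks initialization and the limit.
class MockStepExecutor {
 public:
  // Mirrors SetMaxParallelTimesteps. Assumption of this mock: a non-positive
  // value (such as the default -1) means "no limit".
  void SetMaxParallelTimesteps(int p) { max_parallel_timesteps_ = p; }

  // Records that timestep t has been prepared; calling it again is a no-op.
  void EnsureTimestepInitialized(int t) { initialized_.insert(t); }

  // Returns false if any of the timesteps 0..T-1 was not initialized first.
  bool Run(int T) const {
    for (int t = 0; t < T; ++t) {
      if (initialized_.count(t) == 0) {
        return false;
      }
    }
    return true;
  }

  // How many of T timesteps this mock would allow to be in flight at once.
  int MaxInFlight(int T) const {
    return max_parallel_timesteps_ > 0 ? std::min(T, max_parallel_timesteps_)
                                       : T;
  }

 private:
  int max_parallel_timesteps_ = -1;
  std::set<int> initialized_;
};
```

A typical sequence with this mock is `SetMaxParallelTimesteps(2)`, then `EnsureTimestepInitialized(t)` for `t = 0, 1, 2`, then `Run(3)`, after which at most two timesteps are considered in flight together.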

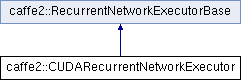

void caffe2::CUDARecurrentNetworkExecutor::AnalyzeOps ( ) [inline], [override], [protected], [virtual]

Checks whether there is an op that depends only on ops from the previous timestep and is not the last op. If such an op exists, computation in subsequent timesteps can start before the whole previous timestep has finished. If there is no such parallelism, the overhead of event-based dependency management can be avoided.

Reimplemented from caffe2::RecurrentNetworkExecutorBase.

Definition at line 31 of file recurrent_network_executor_gpu.h.
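The check described for AnalyzeOps can be sketched in C++ as follows. This is a simplified illustration, not the caffe2 implementation: `StepOp` and `HasTimestepParallelism` are hypothetical names, and the sketch assumes each op records its dependencies as positions in the step net, with a parent whose position is greater than the op's own position taken to come from the previous timestep. Ops with no dependencies at all are skipped, which is a choice of this sketch.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical, simplified step-net operator: its position in the step net
// and the positions of the ops it depends on.
struct StepOp {
  int order;
  std::vector<int> parents;
};

// Returns true if some op other than the last one depends only on ops from
// the previous timestep. Assumption: parent > order marks a dependency on
// the previous timestep.
bool HasTimestepParallelism(const std::vector<StepOp>& ops) {
  for (const StepOp& op : ops) {
    const bool is_last = static_cast<std::size_t>(op.order) + 1 == ops.size();
    if (op.parents.empty() || is_last) {
      continue;  // No dependencies, or the last op: not counted here.
    }
    const bool only_previous_timestep =
        std::all_of(op.parents.begin(), op.parents.end(),
                    [&](int parent) { return parent > op.order; });
    if (only_previous_timestep) {
      return true;
    }
  }
  return false;
}
```

For example, with four ops where op 0 depends only on op 1 of the previous timestep, op 0 of timestep t+1 can start as soon as op 1 of timestep t finishes, so the function returns true. A plain chain in which every op depends on the op just before it in the same timestep returns false, which per the description above is the case where event-based dependency management can be skipped.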

The documentation for this class was generated from the following files:

Generated by Doxygen 1.8.11.