RecurrentNetworkExecutor is a specialized runtime for recurrent neural networks (RNNs).

#include <recurrent_network_executor.h>

Public Member Functions

| Type | Member | Description |
|---|---|---|
| virtual bool | Run (int T)=0 | |
| virtual bool | RunBackwards (int T)=0 | |
| void | EnsureTimestepInitialized (int t, Workspace *ws, const std::vector< std::unique_ptr< ObserverBase< OperatorBase >>> &observers_list) | Callers must call EnsureTimestepInitialized before starting execution for each of the relevant timesteps. |
| void | SetMaxParallelTimesteps (int p) | Set a limit on the number of timesteps that run in parallel. |
| size_t | NumObserversStepNet () | |

Data Fields

| Type | Member |
|---|---|
| bool | debug_ = false |

Protected Member Functions

| Type | Member | Description |
|---|---|---|
| | RecurrentNetworkExecutorBase (const NetDef &step_net_def, std::map< string, string > &recurrent_input_map, std::string timestep_blob) | |
| void | PrintInfo (int t) | For debug purposes, print the dependency structure. |
| virtual void | AnalyzeOps () | |
| virtual bool | ignoreLinkDependencies ()=0 | |

Protected Attributes

| Type | Member |
|---|---|
| std::vector< std::vector< RNNNetOperator > > | timestep_ops_ |
| std::vector< OperatorBase * > | op_ptrs_ |
| std::vector< RNNNetOperator > | timestep_ops_template_ |
| NetDef | step_net_def_ |
| std::vector< std::vector< string > > | op_deps_ |
| std::vector< Workspace * > | workspaces_ |
| std::map< string, string > | recurrent_input_map_ |
| std::string | timestep_blob_ |
| int | max_parallel_timesteps_ = -1 |

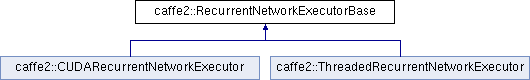

RecurrentNetworkExecutor is a specialized runtime for recurrent neural networks (RNNs).

It is invoked from the RecurrentNetworkOp and RecurrentNetworkGradientOp.

Its main benefit over running each RNN timestep as a separate net is that it can run ops from subsequent timesteps in parallel whenever their dependencies allow. For example, multi-layer LSTMs allow timestep parallelism because the next timestep's lower layer can start executing at the same time as the current timestep's upper layer.
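The dependency pattern behind this parallelism can be illustrated with a small C++ sketch. It assumes a simplified model that is not taken from the executor itself: each (layer, timestep) cell is a single op that depends only on the layer below at the same timestep and on the same layer at the previous timestep. The hypothetical helpers EarliestWave and ParallelWaves compute the earliest "wave" in which each cell could run if every ready cell started immediately; they are not part of the RecurrentNetworkExecutor API.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Hypothetical model: cell (layer l, timestep t) of a stacked RNN depends on
//   (l - 1, t): the lower layer's output at the same timestep, and
//   (l, t - 1): the same layer's hidden state from the previous timestep.
// Returns, for each cell, the earliest 0-based parallel wave in which it can
// run if every cell whose dependencies are satisfied starts immediately.
std::vector<std::vector<int>> EarliestWave(int num_layers, int num_timesteps) {
  assert(num_layers >= 0 && num_timesteps >= 0);
  std::vector<std::vector<int>> wave(num_layers, std::vector<int>(num_timesteps, 0));
  for (int l = 0; l < num_layers; ++l) {
    for (int t = 0; t < num_timesteps; ++t) {
      int ready = 0;  // a cell with no dependencies can start in wave 0
      if (l > 0) ready = std::max(ready, wave[l - 1][t] + 1);  // wait for lower layer
      if (t > 0) ready = std::max(ready, wave[l][t - 1] + 1);  // wait for previous step
      wave[l][t] = ready;
    }
  }
  return wave;
}

// Total number of waves needed when timesteps are allowed to overlap.
int ParallelWaves(int num_layers, int num_timesteps) {
  if (num_layers == 0 || num_timesteps == 0) return 0;
  return EarliestWave(num_layers, num_timesteps)[num_layers - 1][num_timesteps - 1] + 1;
}
```

Under this model, cell (l, t) lands in wave l + t, so layer 0 of timestep 1 runs in the same wave as layer 1 of timestep 0, and an L-layer network over T timesteps needs L + T - 1 waves instead of the L × T steps of a strictly sequential schedule.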

There are two implementations of the RNN executor: one for CPUs (ThreadedRecurrentNetworkExecutor) and another for GPUs (CUDARecurrentNetworkExecutor).

Definition at line 31 of file recurrent_network_executor.h.

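void EnsureTimestepInitialized (int t, Workspace *ws, const std::vector< std::unique_ptr< ObserverBase< OperatorBase >>> &observers_list)  [inline]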

Callers must call EnsureTimestepInitialized before starting execution for each of the relevant timesteps.

If the timestep was already initialized, this is a no-op. The first time it is called for a timestep, the dependencies of the operators in that timestep are analyzed, which incurs higher overhead than subsequent calls.

Definition at line 74 of file recurrent_network_executor.h.
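The lazy, once-per-timestep behavior described above follows a common caching pattern. The following C++ sketch is a hypothetical illustration, not the actual implementation: the class TimestepInitCache and its members are invented names, and AnalyzeDependencies only stands in for the real dependency analysis by counting how often it runs.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical per-timestep initialization cache. The first call for a given
// timestep runs the (expensive) dependency analysis; any later call for the
// same timestep returns immediately.
class TimestepInitCache {
 public:
  void EnsureInitialized(int t) {
    assert(t >= 0);
    const std::size_t idx = static_cast<std::size_t>(t);
    if (idx >= initialized_.size()) {
      initialized_.resize(idx + 1, false);  // grow to cover timestep t
    }
    if (initialized_[idx]) {
      return;  // already initialized: no-op
    }
    AnalyzeDependencies(t);
    initialized_[idx] = true;
  }

  // Number of times the analysis actually ran.
  int analysis_count() const { return analysis_count_; }

 private:
  // Stand-in for analyzing the operator dependencies of timestep t;
  // here it only counts invocations.
  void AnalyzeDependencies(int /*t*/) { ++analysis_count_; }

  std::vector<bool> initialized_;
  int analysis_count_ = 0;
};
```

Calling EnsureInitialized(0) twice and EnsureInitialized(2) twice runs the analysis only twice, once per distinct timestep; only the first call for each timestep pays the analysis cost.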

void PrintInfo (int t)  [inline, protected]

For debug purposes, print the dependency structure.

Set rnn_executor_debug=1 in the RecurrentNetworkOp to enable.

Definition at line 418 of file recurrent_network_executor.h.

void SetMaxParallelTimesteps (int p)  [inline]

Set a limit on the number of timesteps that run in parallel.

Useful for forward-only execution when workspaces are rotated over timesteps, i.e., when timestep[t] and timestep[t + p] share the same workspace.

Definition at line 185 of file recurrent_network_executor.h.
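The reason for the limit can be sketched under one assumption: that p workspaces are assigned to timesteps round-robin, so timestep t uses workspace t % p, which matches the description of timestep[t] and timestep[t + p] sharing a workspace. The helpers WorkspaceIndex, CanOverlap, and WindowIsConflictFree below are hypothetical and not part of the executor's API.

```cpp
#include <cassert>

// Hypothetical round-robin assignment: with num_workspaces = p, timestep t
// uses workspace t % p, so timestep[t] and timestep[t + p] share a workspace.
int WorkspaceIndex(int t, int num_workspaces) {
  assert(t >= 0 && num_workspaces > 0);
  return t % num_workspaces;
}

// Two timesteps can safely run at the same time only if they use
// different workspaces.
bool CanOverlap(int t1, int t2, int num_workspaces) {
  return WorkspaceIndex(t1, num_workspaces) != WorkspaceIndex(t2, num_workspaces);
}

// Returns true if every window of `max_parallel` consecutive timesteps in
// [0, num_timesteps) uses pairwise-distinct workspaces. Windows that do not
// fit entirely inside the range are not checked.
bool WindowIsConflictFree(int max_parallel, int num_workspaces, int num_timesteps) {
  for (int start = 0; start + max_parallel <= num_timesteps; ++start) {
    for (int a = start; a < start + max_parallel; ++a) {
      for (int b = a + 1; b < start + max_parallel; ++b) {
        if (!CanOverlap(a, b, num_workspaces)) {
          return false;  // a and b would share a workspace
        }
      }
    }
  }
  return true;
}
```

With 3 workspaces, any 3 consecutive timesteps use distinct workspaces, but any window of 4 contains a pair such as timestep[0] and timestep[3] that map to the same one. Capping parallelism at the rotation period p is what keeps concurrently running timesteps from writing into the same workspace at the same time.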

1.8.11
