Public Member Functions

| Kind | Member |
|---|---|
| def | `__init__ (self, alpha=0.01, epsilon=1e-4, decay=0.95, policy="fixed", sparse_dedup_aggregator=None, engine='', **kwargs)` |
| def | `scale_learning_rate (self, scale)` |

Public Member Functions inherited from caffe2.python.optimizer.Optimizer

| Kind | Member |
|---|---|
| def | `__init__ (self)` |
| def | `__call__ (self, net, param_init_net, param, grad=None)` |
| def | `get_cpu_blob_name (self, base_str, node_name='')` |
| def | `get_gpu_blob_name (self, base_str, gpu_id, node_name)` |
| def | `make_unique_blob_name (self, base_str)` |
| def | `build_lr (self, net, param_init_net, base_learning_rate, learning_rate_blob=None, policy="fixed", iter_val=0, **kwargs)` |
| def | `add_lr_multiplier (self, lr_multiplier)` |
| def | `get_auxiliary_parameters (self)` |
| def | `scale_learning_rate (self, *args, **kwargs)` |
| def | `create_lars_inputs (self, param_init_net, weight_decay, trust, lr_max)` |

Public Attributes

| Attribute |
|---|
| `alpha` |
| `epsilon` |
| `decay` |
| `policy` |
| `sparse_dedup_aggregator` |
| `engine` |
| `init_kwargs` |

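Static Public Member Functions inherited from caffe2.python.optimizer.Optimizer

| Kind | Member |
|---|---|
| def | `dedup (net, sparse_dedup_aggregator, grad)` |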

Definition at line 738 of file optimizer.py.
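The inherited static `dedup (net, sparse_dedup_aggregator, grad)` presumably applies the `sparse_dedup_aggregator` strategy ("mean" or "sum") to a sparse gradient whose slices may share an index. To illustrate what that deduplication means, here is a minimal NumPy sketch on plain arrays. `dedup_sparse_grad` is a hypothetical helper, not the caffe2 implementation, which takes a `net` argument and works on blobs rather than arrays.

```python
import numpy as np


def dedup_sparse_grad(indices, values, aggregator="sum"):
    """Merge gradient slices that share an index (illustrative sketch).

    indices: 1-D integer array of row ids, possibly with duplicates.
    values: array whose first dimension matches len(indices).
    aggregator: "sum" or "mean", mirroring sparse_dedup_aggregator.
    Returns (unique_indices, aggregated_values).
    """
    indices = np.asarray(indices)
    values = np.asarray(values)
    if aggregator not in ("sum", "mean"):
        raise ValueError("aggregator must be 'sum' or 'mean'")
    # Sorted unique ids, plus the position of each input row among them.
    unique, inverse = np.unique(indices, return_inverse=True)
    out = np.zeros((len(unique),) + values.shape[1:], dtype=values.dtype)
    # Scatter-add each slice into the row of its unique index.
    np.add.at(out, inverse, values)
    if aggregator == "mean":
        # Divide each summed row by how many slices contributed to it.
        counts = np.bincount(inverse, minlength=len(unique))
        out = out / counts.reshape((-1,) + (1,) * (values.ndim - 1))
    return unique, out


# Usage: rows 3 and 1 of an embedding table, with row 3 appearing twice.
indices = np.array([3, 1, 3])
grads = np.array([[1.0, 2.0], [10.0, 20.0], [3.0, 4.0]])
print(dedup_sparse_grad(indices, grads, "mean"))
```

Summing keeps the total gradient mass of repeated rows, while averaging keeps each row's update on the same scale regardless of how often it appears in a batch.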

`def caffe2.python.optimizer.AdadeltaOptimizer.__init__ (self, alpha = 0.01, epsilon = 1e-4, decay = 0.95, policy = "fixed", sparse_dedup_aggregator = None, engine = '', **kwargs)`

Constructor for the Adadelta optimizer.

Args:

- alpha: learning rate.
- epsilon: small constant used by Adadelta to avoid numerical issues such as division by zero.
- decay: decay factor for Adadelta's running average of squared gradients.
- policy: learning-rate policy, i.e. how the learning rate is applied over iterations; options include "fixed", "step", "exp", etc.
- sparse_dedup_aggregator: deduplication strategy for gradient slices. Only takes effect with sparse gradients; options include "mean" and "sum".
- engine: engine used to run the operator; options include "" (default) and "CUDNN".
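To show where `alpha`, `epsilon` and `decay` enter the computation, here is a minimal NumPy sketch of one step of the standard Adadelta rule, with the step scaled by `alpha`. It is an assumption that caffe2's operator follows exactly this form; `adadelta_step` and the state arrays `h` (running average of squared gradients) and `d` (running average of squared updates) are hypothetical names, and the sketch uses the same `decay` for both averages as in the textbook algorithm.

```python
import numpy as np


def adadelta_step(w, g, h, d, alpha=0.01, epsilon=1e-4, decay=0.95):
    """One dense Adadelta update (sketch of the standard rule).

    w: parameters, g: gradient,
    h: running average of squared gradients,
    d: running average of squared updates.
    Returns new (w, h, d); the inputs are not modified.
    """
    # Decay the running average of squared gradients.
    h_new = decay * h + (1.0 - decay) * g * g
    # Rescale the gradient by RMS(past updates) / RMS(gradients);
    # epsilon keeps both square roots away from zero.
    update = np.sqrt(d + epsilon) / np.sqrt(h_new + epsilon) * g
    # Step against the gradient, scaled by the learning rate alpha.
    w_new = w - alpha * update
    # Decay the running average of squared updates.
    d_new = decay * d + (1.0 - decay) * update * update
    return w_new, h_new, d_new


# Usage: a single step from freshly initialised (zero) state.
w = np.array([1.0, -2.0])
g = np.array([2.0, -1.0])
state = np.zeros_like(w)
w, h, d = adadelta_step(w, g, state, state)
print(w, h, d)
```

Because the ratio of the two RMS terms adapts the per-coordinate step size, `alpha` acts mainly as a global scale; with zero initial state the very first steps are small and grow as `d` accumulates.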

Definition at line 740 of file optimizer.py.
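The `policy` argument selects a learning-rate schedule applied on top of `alpha`. As an illustration only, the sketch below assumes the common Caffe-style definitions, where "step" multiplies the rate by `gamma` once every `stepsize` iterations and "exp" multiplies it by `gamma` at every iteration. The names `gamma`, `stepsize`, `lr_multiplier` and `effective_lr` are assumptions rather than parameters documented on this page, and the sketch does not cover the other policies covered by "etc.".

```python
def lr_multiplier(policy, iteration, gamma=0.999, stepsize=1000):
    """Return the schedule multiplier for a few common policies (sketch).

    gamma and stepsize are illustrative defaults, not values taken
    from optimizer.py.
    """
    if policy == "fixed":
        # Constant learning rate.
        return 1.0
    if policy == "step":
        # Multiply by gamma once every `stepsize` iterations.
        return gamma ** (iteration // stepsize)
    if policy == "exp":
        # Multiply by gamma at every iteration.
        return gamma ** iteration
    raise ValueError(f"unsupported policy: {policy!r}")


def effective_lr(alpha, policy, iteration, **schedule_kwargs):
    """Base learning rate alpha scaled by the policy's multiplier."""
    return alpha * lr_multiplier(policy, iteration, **schedule_kwargs)


# Usage: halve the rate every 1000 iterations.
print(effective_lr(0.01, "step", 2500, gamma=0.5, stepsize=1000))
```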

The documentation for this class was generated from the following file: optimizer.py

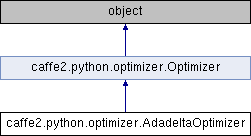
