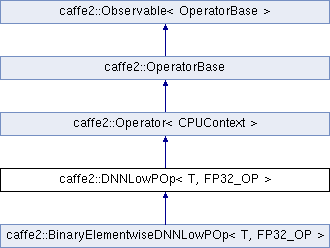

A convenient base class for C2 operators with DNNLOWP engine. More...

#include <dnnlowp_op.h>

Public Member Functions

| Type | Member | Description |
|---|---|---|
| | `USE_OPERATOR_FUNCTIONS (CPUContext)` | |
| | `DNNLowPOp (const OperatorDef &operator_def, Workspace *ws)` | |

Public Member Functions inherited from caffe2::Operator< CPUContext >

| Type | Member | Description |
|---|---|---|
| | `Operator (const OperatorDef &operator_def, Workspace *ws)` | |
| | `Operator (const c10::FunctionSchema &fn_schema, std::vector< c10::IValue > inputs, std::vector< at::Tensor > outputs)` | |
| `const Tensor &` | `Input (int idx, DeviceType type=CPUContext::GetDeviceType())` | Retrieve a non-owning reference to the input at position `idx` for this operator. |
| `Tensor` | `XOutput (int idx, at::IntArrayRef dims, at::TensorOptions options)` | XOutput is a modernized version of Output that returns a Tensor rather than a Tensor* (the raw pointer in the latter case is useless, since Tensor is a pointer type). |
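The XOutput description says a raw pointer to a Tensor adds nothing because Tensor is itself a pointer type. The minimal C++17 sketch below illustrates why. `TensorHandle` and `MakeOutput` are hypothetical names, not part of Caffe2. The sketch only assumes, as the description states, that a tensor object is a thin handle around shared storage.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <vector>

// Hypothetical stand-in for a pointer-like tensor type (NOT caffe2::Tensor):
// the object is only a shared pointer to the underlying storage.
struct TensorHandle {
  std::shared_ptr<std::vector<float>> impl;

  float& at(std::size_t i) {
    assert(impl != nullptr && i < impl->size());
    return (*impl)[i];
  }
};

// Hypothetical XOutput-style factory that returns the handle by value.
// Copying a TensorHandle copies the shared pointer, never the elements.
TensorHandle MakeOutput(std::size_t n) {
  return TensorHandle{std::make_shared<std::vector<float>>(n, 0.0f)};
}
```

Copying the returned handle (`TensorHandle b = a;`) makes both names refer to the same storage, so a write through one is visible through the other. A `TensorHandle*` would only add another level of indirection.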

Public Member Functions inherited from caffe2::OperatorBase

| Type | Member | Description |
|---|---|---|
| | `OperatorBase (const OperatorDef &operator_def, Workspace *ws)` | |
| | `OperatorBase (const c10::FunctionSchema &schema, std::vector< c10::IValue > inputs, std::vector< at::Tensor > outputs)` | |
| `bool` | `isLegacyOperator () const` | Returns true if the operator was instantiated with an OperatorDef. New operators should be instantiated with a FunctionSchema. |
| `const c10::FunctionSchema &` | `getFunctionSchema () const` | |
| `bool` | `HasArgument (const string &name) const` | Checks if the operator has an argument of the given name. |
| `template<typename T> T` | `GetSingleArgument (const string &name, const T &default_value) const` | |
| `template<typename T> bool` | `HasSingleArgumentOfType (const string &name) const` | |
| `template<typename T> vector< T >` | `GetVectorFromIValueList (const c10::IValue &value) const` | |
| `template<typename T> vector< T >` | `GetRepeatedArgument (const string &name, const vector< T > &default_value={}) const` | |
| `template<typename T> const T &` | `Input (int idx)` | |
| `template<typename T> const T &` | `Input (int idx, DeviceType type)` | |
| `template<typename T> T *` | `Output (int idx)` | |
| `template<typename T> T *` | `Output (int idx, DeviceType type)` | |
| `Tensor` | `XOutputTensor (int idx, at::IntArrayRef dims, at::TensorOptions options)` | |
| `void` | `SetOutputTensor (int idx, Tensor tensor)` | |
| `Tensor` | `OutputTensorOrUndefined (int idx)` | |
| `Tensor *` | `OutputTensor (int idx, at::IntArrayRef dims, at::TensorOptions options)` | |
| `Tensor *` | `OutputTensorCopyFrom (int idx, at::TensorOptions options, const Tensor &src, bool async=false)` | |
| `Tensor *` | `OutputTensorAlias (int idx, const Tensor &src)` | |
| `template<typename T> T *` | `Output (int idx, T *allocated)` | |
| `const Blob &` | `InputBlob (int idx)` | |
| `Blob *` | `OutputBlob (int idx)` | |
| `bool` | `IsInputOutputAlias (int i, int j)` | |
| `template<typename T> bool` | `InputIsType (int idx)` | |
| `bool` | `InputIsTensorType (int idx, DeviceType device_type)` | |
| `template<typename T> bool` | `OutputIsType (int idx)` | |
| `bool` | `OutputIsTensorType (int idx, DeviceType type)` | |
| `int` | `InputSize () const` | |
| `int` | `OutputSize () const` | |
| `const vector< const Blob * > &` | `Inputs () const` | |
| `const vector< Blob * > &` | `Outputs ()` | |
| `vector< TensorShape >` | `InputTensorShapes () const` | |
| `virtual void` | `WaitEvent (const Event &ev, int=-1)` | |
| `void` | `Wait (const OperatorBase &other, int stream_id=-1)` | |
| `virtual void` | `WaitEvents (const std::vector< const Event * > &events, int=-1)` | |
| `virtual void` | `Finish ()` | |
| `virtual bool` | `Run (int=0)` | |
| `virtual bool` | `HasAsyncPart () const` | |
| `virtual bool` | `SupportsAsyncScheduling () const` | |
| `virtual bool` | `RunAsync (int stream_id=0)` | |
| `virtual void` | `AddRelatedBlobInfo (EnforceNotMet *err)` | |
| `const OperatorDef &` | `debug_def () const` | |
| `void` | `set_debug_def (const std::shared_ptr< const OperatorDef > &operator_def)` | |
| `bool` | `has_debug_def () const` | |
| `void` | `RecordLastFailedOpNetPosition ()` | |
| `int` | `net_position () const` | |
| `void` | `set_net_position (int idx)` | |
| `const DeviceOption &` | `device_option () const` | |
| `const Event &` | `event () const` | |
| `Event &` | `event ()` | |
| `void` | `ResetEvent ()` | |
| `void` | `DisableEvent ()` | |
| `bool` | `IsEventDisabled () const` | |
| `virtual void` | `SyncDeviceBarrierForObservers ()` | |
| `virtual bool` | `IsStreamFree (int) const` | |
| `const std::string &` | `type () const` | |
| `void` | `annotate_engine (const std::string &engine)` | |
| `const std::string &` | `engine () const` | |
| `void` | `SetExecutorHelper (ExecutorHelper *helper)` | |
| `ExecutorHelper *` | `GetExecutorHelper () const` | |
| `std::vector< at::Tensor >` | `move_newstyle_outputs ()&&` | |
| `template<> NetDef` | `GetSingleArgument (const std::string &name, const NetDef &default_value) const` | |
| `template<> vector< int >` | `GetVectorFromIValueList (const c10::IValue &value) const` | |
| `template<> vector< float >` | `GetVectorFromIValueList (const c10::IValue &value) const` | |
| `template<> vector< string >` | `GetVectorFromIValueList (const c10::IValue &value) const` | |
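The argument accessors in this group (`HasArgument`, `HasSingleArgumentOfType`, `GetSingleArgument`) follow a lookup-with-default contract. The sketch below is a hypothetical, self-contained stand-in; `ArgStore` and `ArgValue` are invented names. Real Caffe2 operators read their arguments from the `OperatorDef` protobuf, which is not modeled here. Throwing on a type mismatch is an assumption of this sketch.

```cpp
#include <cassert>
#include <map>
#include <stdexcept>
#include <string>
#include <utility>
#include <variant>

// Hypothetical argument value type: each named argument holds exactly one of these.
using ArgValue = std::variant<bool, int, float>;

// Hypothetical, simplified argument store (not caffe2::OperatorBase).
class ArgStore {
 public:
  void Set(const std::string& name, ArgValue value) {
    assert(!name.empty());
    args_[name] = std::move(value);
  }

  // True if an argument with this name exists, regardless of its type.
  bool HasArgument(const std::string& name) const {
    return args_.count(name) > 0;
  }

  // True only if the argument exists and holds a value of type T.
  template <typename T>
  bool HasSingleArgumentOfType(const std::string& name) const {
    auto it = args_.find(name);
    return it != args_.end() && std::holds_alternative<T>(it->second);
  }

  // Returns the stored value, or default_value when the argument is absent.
  // In this sketch, a present argument of a different type is an error.
  template <typename T>
  T GetSingleArgument(const std::string& name, const T& default_value) const {
    auto it = args_.find(name);
    if (it == args_.end()) {
      return default_value;
    }
    if (const T* value = std::get_if<T>(&it->second)) {
      return *value;
    }
    throw std::invalid_argument("argument '" + name + "' has another type");
  }

 private:
  std::map<std::string, ArgValue> args_;
};
```

For example, after `args.Set("Y_scale", 0.05f)`, the call `args.GetSingleArgument<int>("Y_zero_point", 0)` falls back to the default `0`. This mirrors the optional Y_zero_point argument described in the detailed description.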

Public Member Functions inherited from caffe2::Observable< OperatorBase >

| Type | Member | Description |
|---|---|---|
| | `Observable (Observable &&)=default` | |
| `Observable &` | `operator= (Observable &&)=default` | |
| | `C10_DISABLE_COPY_AND_ASSIGN (Observable)` | |
| `const Observer *` | `AttachObserver (std::unique_ptr< Observer > observer)` | |
| `std::unique_ptr< Observer >` | `DetachObserver (const Observer *observer_ptr)` | Returns a unique_ptr to the removed observer. |
| `virtual size_t` | `NumObservers ()` | |
| `void` | `StartAllObservers ()` | |
| `void` | `StopAllObservers ()` | |
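`AttachObserver` and `DetachObserver` transfer ownership in opposite directions. This minimal sketch uses hypothetical `Observer` and `Observable` types, not the Caffe2 templates, to illustrate the pattern:

- The observable owns the attached observers.
- `AttachObserver` returns a non-owning pointer that serves as a key.
- `DetachObserver` hands ownership back as a `std::unique_ptr`.

Returning `nullptr` for a pointer that was never attached is an assumption of the sketch.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <memory>
#include <utility>
#include <vector>

// Hypothetical, simplified observer interface (not caffe2::ObserverBase).
struct Observer {
  virtual ~Observer() = default;
  virtual void Start() {}
  virtual void Stop() {}
};

// Hypothetical observable: owns its observers via unique_ptr.
class Observable {
 public:
  // Takes ownership and returns a non-owning pointer usable as a key.
  const Observer* AttachObserver(std::unique_ptr<Observer> observer) {
    assert(observer != nullptr);
    const Observer* key = observer.get();
    observers_.push_back(std::move(observer));
    return key;
  }

  // Removes the observer and returns ownership to the caller.
  std::unique_ptr<Observer> DetachObserver(const Observer* observer_ptr) {
    auto it = std::find_if(observers_.begin(), observers_.end(),
                           [observer_ptr](const std::unique_ptr<Observer>& o) {
                             return o.get() == observer_ptr;
                           });
    if (it == observers_.end()) {
      return nullptr;  // not attached: nothing to hand back
    }
    std::unique_ptr<Observer> removed = std::move(*it);
    observers_.erase(it);
    return removed;
  }

  std::size_t NumObservers() const { return observers_.size(); }
  void StartAllObservers() { for (auto& o : observers_) o->Start(); }
  void StopAllObservers() { for (auto& o : observers_) o->Stop(); }

 private:
  std::vector<std::unique_ptr<Observer>> observers_;
};
```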

Protected Member Functions

| Type | Member | Description |
|---|---|---|
| `const TensorCPU &` | `InputTensorCPU_ (int idx)` | |
| `TensorCPU *` | `OutputTensorCPU_ (int idx)` | |
| `Tensor *` | `OutputTensorCPU_ (int idx, at::IntList dims, at::TensorOptions options)` | |
| `T *` | `GetQuantizedOutputData_ ()` | |
| `void` | `MeasureQuantizationError_ ()` | |
| `void` | `RunOnDeviceEpilogue_ ()` | |
| `void` | `ParseDNNLowPOperatorArguments_ ()` | |
| `void` | `GetOutputQuantizationParams_ ()` | |
| `OpWrapper< FP32_OP, T > *` | `Fp32Op_ ()` | |

Protected Member Functions inherited from caffe2::OperatorBase

| Type | Member | Description |
|---|---|---|
| `virtual void` | `RecordEvent (const char *=nullptr)` | |
| `void` | `SetEventFinished (const char *err_msg=nullptr)` | |
| `void` | `SetEventFinishedWithException (const char *err_msg=nullptr)` | |
| `std::string` | `getErrorMsg ()` | |
| | `C10_DISABLE_COPY_AND_ASSIGN (OperatorBase)` | |

Protected Attributes

| Type | Attribute |
|---|---|
| `bool` | `dequantize_output_ {false}` |
| `bool` | `measure_quantization_error_ {false}` |
| `std::string` | `followed_by_` |
| `std::vector< dnnlowp::TensorQuantizationParams >` | `in_qparams_` |
| `dnnlowp::TensorQuantizationParams` | `out_qparams_` |
| `std::unique_ptr< OpWrapper< FP32_OP, T > >` | `fp32_op_` |
| `std::unique_ptr< dnnlowp::QuantizationFactory >` | `qfactory_` |
| `std::vector< T >` | `out_temp_` |
| `dnnlowp::QuantizationErrorStats` | `quantization_error_stats_` |
| `bool` | `arguments_parsed_ {false}` |

Protected Attributes inherited from caffe2::OperatorBase

| Type | Attribute |
|---|---|
| `std::unique_ptr< Event >` | `event_` |

Protected Attributes inherited from caffe2::Observable< OperatorBase >

| Type | Attribute |
|---|---|
| `std::vector< std::unique_ptr< Observer > >` | `observers_list_` |

Additional Inherited Members

Public Types inherited from caffe2::Observable< OperatorBase >

| Type | Member |
|---|---|
| `using` | `Observer = ObserverBase< OperatorBase >` |

Static Public Attributes inherited from caffe2::OperatorBase

| Type | Attribute |
|---|---|
| `static const int` | `kNoNetPositionSet = -1` |

A convenient base class for C2 operators with DNNLOWP engine.

DNNLOWP ops are flexible about the types of their input and output blobs. For example, some inputs can be ordinary fp32 tensors, which are quantized before the actual computation; otherwise, the inputs should be pre-quantized Int8TensorCPU. One constraint applies: the weight is pre-quantized if and only if the bias is also pre-quantized.
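The sketch below roughly illustrates the fp32 input path and the weight/bias constraint. It quantizes fp32 values into 8-bit unsigned integers with an affine mapping. It is a simplified stand-in, not DNNLOWP's own quantization routines:

- `QParams`, `QuantizeU8` and `WeightBiasConsistent` are hypothetical names.
- Round-to-nearest with clamping to [0, 255] is an assumed scheme.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical affine quantization parameters (not dnnlowp::TensorQuantizationParams):
// real value ~= scale * (q - zero_point).
struct QParams {
  float scale;
  std::int32_t zero_point;
};

// Quantizes fp32 values to uint8: round x / scale to nearest, add zero_point,
// then saturate to [0, 255].
std::vector<std::uint8_t> QuantizeU8(const std::vector<float>& x, QParams qp) {
  assert(qp.scale > 0.0f);
  std::vector<std::uint8_t> q(x.size());
  for (std::size_t i = 0; i < x.size(); ++i) {
    long v = std::lround(x[i] / qp.scale) + qp.zero_point;
    q[i] = static_cast<std::uint8_t>(std::clamp(v, 0L, 255L));
  }
  return q;
}

// Encodes the stated constraint: the weight is pre-quantized
// if and only if the bias is also pre-quantized.
bool WeightBiasConsistent(bool weight_prequantized, bool bias_prequantized) {
  return weight_prequantized == bias_prequantized;
}
```

With `scale = 0.5` and `zero_point = 10`, the value `-1.0f` maps to `8`, and out-of-range values saturate at `0` or `255`.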

Static quantization vs. dynamic quantization

When the Y_scale argument is set (together with Y_zero_point, which is optional and defaults to 0) and dequantize_output is false, we do static quantization. This means we use the same pre-computed scale and zero_point for the output activation tensor. Otherwise, we do dynamic quantization, computing the output scale and zero_point from the min/max of the output activation tensor for each batch.

The Y_scale and Y_zero_point arguments are used for static quantization. The scale and zero_point of an Int8TensorCPU carry quantization information across operators in both static and dynamic quantization. Consequently, when dynamic quantization is used, the scale and zero_point of an Int8TensorCPU are valid only for the current batch and are reset in the next batch.
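The choice between static and dynamic quantization can be sketched as follows. This is an illustrative approximation under stated assumptions:

- The function names are hypothetical.
- An 8-bit unsigned range is assumed.
- The dynamic case uses a plain min/max rule, widened to include 0, rather than whatever chooser dnnlowp.cc actually applies.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstdint>
#include <optional>
#include <vector>

// Hypothetical affine quantization parameters: real ~= scale * (q - zero_point).
struct QParams {
  float scale;
  std::int32_t zero_point;
};

// Dynamic quantization (simplified min/max rule, an assumption): derive
// 8-bit parameters from this batch's range, widened to include 0.0f.
QParams ChooseDynamicQParams(const std::vector<float>& y) {
  assert(!y.empty());
  auto [lo_it, hi_it] = std::minmax_element(y.begin(), y.end());
  float lo = std::min(*lo_it, 0.0f);
  float hi = std::max(*hi_it, 0.0f);
  float scale = (hi > lo) ? (hi - lo) / 255.0f : 1.0f;
  long zp = std::lround(-lo / scale);
  return QParams{scale, static_cast<std::int32_t>(std::clamp(zp, 0L, 255L))};
}

// Static quantization applies when Y_scale is set and dequantize_output is
// false (Y_zero_point defaults to 0); otherwise parameters come from the batch.
QParams ChooseOutputQParams(std::optional<float> y_scale,
                            std::int32_t y_zero_point,
                            bool dequantize_output,
                            const std::vector<float>& y) {
  if (y_scale.has_value() && !dequantize_output) {
    return QParams{*y_scale, y_zero_point};  // identical for every batch
  }
  return ChooseDynamicQParams(y);  // recomputed for each batch
}
```

With `Y_scale = 0.05` and `Y_zero_point = 3`, every batch gets the same parameters. Without `Y_scale`, the parameters depend on the batch:

- A batch spanning [0, 510] gets scale 2 and zero_point 0.
- A batch spanning [-51, 204] gets scale 1 and zero_point 51.

This is why per-tensor parameters are only valid for the current batch under dynamic quantization.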

C2 operators with the DNNLOWP engine have the following arguments:

For the following quantization-method-related options, please refer to caffe2/quantization/server/dnnlowp.cc for more details.

Definition at line 77 of file dnnlowp_op.h.

1.8.11