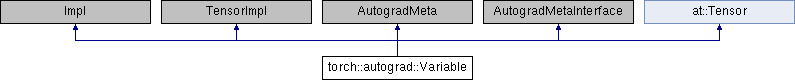

A Variable augments a Tensor with the ability to interact in our autograd machinery.


#include <variable.h>

Public Member Functions

| Variable ()=default | |

Default constructor.

| Variable (at::Tensor const &rhs) | |

| Variable (at::Tensor &&rhs) | |

| const at::Tensor & | data () const noexcept |

| at::Tensor & | data () noexcept |

| const std::shared_ptr< Function > & | grad_fn () const |

Gets the gradient function of the Variable.

| Function * | grad_fn_unsafe () const |

Gets the raw gradient function pointer, whatever it currently is.

| void | set_grad_accumulator (std::weak_ptr< Function > grad_accumulator) |

Sets the gradient accumulator of the Variable.

| std::shared_ptr< Function > | try_get_grad_accumulator () const |

Attempts to get a pointer to the gradient accumulator of the Variable, if it still exists.

| std::shared_ptr< Function > | grad_accumulator () const |

Gets the gradient accumulator of the Variable if it has one, or else creates one on the fly and returns it.
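The three accumulator entries above describe a weakly held, lazily created object: the Variable stores a `std::weak_ptr`, `try_get_grad_accumulator()` succeeds only while something else keeps the accumulator alive, and `grad_accumulator()` recreates it on demand. The sketch below models that contract in plain C++ so it compiles without libtorch. `ToyFunction` and `ToyVariable` are hypothetical stand-ins, not the library's `Function` or `Variable`, and the mutex is a design choice of the sketch rather than a documented detail of this class.

```cpp
#include <cassert>
#include <memory>
#include <mutex>
#include <utility>

// Hypothetical stand-in for an autograd Function (not libtorch's class).
struct ToyFunction {
  int id;
};

// Hypothetical model of a leaf Variable's accumulator bookkeeping.
class ToyVariable {
 public:
  // Keeps only a weak reference: the Variable does not own its accumulator.
  void set_grad_accumulator(std::weak_ptr<ToyFunction> acc) {
    std::lock_guard<std::mutex> guard(mutex_);
    grad_accumulator_ = std::move(acc);
  }

  // Returns the accumulator while something else keeps it alive, else nullptr.
  std::shared_ptr<ToyFunction> try_get_grad_accumulator() const {
    std::lock_guard<std::mutex> guard(mutex_);
    return grad_accumulator_.lock();
  }

  // Returns the live accumulator, creating (and weakly caching) one if needed.
  std::shared_ptr<ToyFunction> grad_accumulator() const {
    std::lock_guard<std::mutex> guard(mutex_);
    if (auto existing = grad_accumulator_.lock()) {
      return existing;
    }
    auto created = std::make_shared<ToyFunction>(ToyFunction{next_id_++});
    grad_accumulator_ = created;  // cache weakly; the caller holds the strong ref
    return created;
  }

 private:
  mutable std::mutex mutex_;
  mutable std::weak_ptr<ToyFunction> grad_accumulator_;
  mutable int next_id_ = 0;
};
```

A plausible reason for holding the accumulator weakly is to avoid an ownership cycle if the accumulator in turn refers back to the Variable; once the last strong owner drops it, the next `grad_accumulator()` call builds a fresh one.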

| Edge | gradient_edge () const |

Returns the "canonical" gradient edge of this Variable, i.e. either the gradient function if this is an interior Variable, or the gradient accumulator otherwise.

| Variable | detach () const |

Returns a copy of this Variable that is detached from its autograd graph and has a blank version.

| void | detach_ () |

Like detach(), but detaches this Variable from its autograd graph in place.
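To contrast `detach()` and `detach_()`, here is a minimal plain-C++ model that assumes a Variable is little more than shared data, an optional `grad_fn` and a version counter. All names are hypothetical; the fresh counter returned by the sketch's `detach()` follows the "blank version" wording above and is not taken from libtorch's implementation.

```cpp
#include <cassert>
#include <cstdint>
#include <memory>
#include <string>
#include <vector>

// Hypothetical autograd node; only carries a label here.
struct ToyNode {
  std::string name;
};

struct ToyVariable {
  std::shared_ptr<std::vector<double>> data;  // storage shared between copies
  std::shared_ptr<ToyNode> grad_fn;           // null for leaves
  std::shared_ptr<uint32_t> version;          // version counter

  // Out-of-place: same data, no history, fresh ("blank") version counter.
  ToyVariable detach() const {
    return ToyVariable{data, nullptr, std::make_shared<uint32_t>(0)};
  }

  // In-place: this Variable drops its own history.
  void detach_() { grad_fn.reset(); }
};
```

Because the detached copy shares `data`, writes through it are visible in the original; only the autograd history is cut.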

| void | backward (c10::optional< Tensor > gradient, bool keep_graph, bool create_graph) const |

Computes the gradient of the current tensor w.r.t. graph leaves.
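To make "gradient w.r.t. graph leaves" concrete, the sketch below is a toy scalar reverse-mode pass: it orders the graph topologically, seeds the root with `gradient` (1.0 by default), applies the chain rule from outputs to inputs, and accumulates results only into leaves. It is a hypothetical illustration, not libtorch's engine, and it has no equivalent of the `keep_graph` or `create_graph` parameters.

```cpp
#include <cassert>
#include <functional>
#include <memory>
#include <unordered_map>
#include <unordered_set>
#include <utility>
#include <vector>

// Hypothetical scalar autograd node (not libtorch's Variable).
struct ToyScalar {
  double value = 0.0;
  double grad = 0.0;  // written only for leaves, like an accumulated .grad
  // (input, d(this)/d(input)) pairs; empty for leaves.
  std::vector<std::pair<std::shared_ptr<ToyScalar>, double>> inputs;
  bool is_leaf() const { return inputs.empty(); }
};
using ToyVar = std::shared_ptr<ToyScalar>;

ToyVar make_leaf(double v) {
  auto s = std::make_shared<ToyScalar>();
  s->value = v;
  return s;
}

ToyVar mul(const ToyVar& a, const ToyVar& b) {
  auto out = std::make_shared<ToyScalar>();
  out->value = a->value * b->value;
  out->inputs = {{a, b->value}, {b, a->value}};  // d(ab)/da = b, d(ab)/db = a
  return out;
}

ToyVar add(const ToyVar& a, const ToyVar& b) {
  auto out = std::make_shared<ToyScalar>();
  out->value = a->value + b->value;
  out->inputs = {{a, 1.0}, {b, 1.0}};
  return out;
}

void backward(const ToyVar& root, double gradient = 1.0) {
  // Depth-first post-order yields inputs before the nodes that use them.
  std::vector<ToyScalar*> order;
  std::unordered_set<ToyScalar*> seen;
  std::function<void(ToyScalar*)> visit = [&](ToyScalar* node) {
    if (!seen.insert(node).second) return;
    for (const auto& in : node->inputs) visit(in.first.get());
    order.push_back(node);
  };
  visit(root.get());

  // Walk from the root towards the leaves, applying the chain rule.
  std::unordered_map<ToyScalar*, double> pending{{root.get(), gradient}};
  for (auto it = order.rbegin(); it != order.rend(); ++it) {
    ToyScalar* node = *it;
    double g = pending[node];
    if (node->is_leaf()) {
      node->grad += g;  // leaves accumulate
    } else {
      for (const auto& in : node->inputs) pending[in.first.get()] += g * in.second;
    }
  }
}
```

For `z = x * y + x` with `x = 3` and `y = 4`, the pass yields `dz/dx = y + 1 = 5` and `dz/dy = x = 3`, while the interior node `z` keeps no gradient of its own.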

| void | set_data (const at::Tensor &new_data) |

Sets the Tensor held by this Variable to the one supplied.

| void | set_gradient_edge (Edge edge) noexcept |

Sets the gradient edge of the Variable, i.e. its grad_fn and input_nr.

| uint32_t | output_nr () const noexcept |

Returns the input index of the gradient Function to which this Variable is connected.

| bool | is_leaf () const noexcept |

True if this Variable is a leaf and thus does not have a grad_fn.
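The gradient-edge entries above (gradient_edge(), set_gradient_edge(), output_nr(), is_leaf()) fit together as follows: an interior Variable points at its grad_fn together with an input index, while a leaf points at its gradient accumulator. The plain-C++ model below is a hypothetical sketch of that relationship. `ToyNode`, `ToyEdge` and `ToyVariable` are not libtorch types, the node labels are illustrative strings, and the leaf's input index of 0 is an assumption of the sketch.

```cpp
#include <cassert>
#include <cstdint>
#include <memory>
#include <string>
#include <utility>

// Hypothetical autograd node; only carries a label here.
struct ToyNode {
  std::string name;
};

// Modeled on the idea of an Edge: a target node plus an input index on it.
struct ToyEdge {
  std::shared_ptr<ToyNode> function;
  uint32_t input_nr;
};

class ToyVariable {
 public:
  // In this sketch, set_gradient_edge only ever writes grad_fn and output_nr.
  void set_gradient_edge(ToyEdge edge) noexcept {
    grad_fn_ = std::move(edge.function);
    output_nr_ = edge.input_nr;
  }

  bool is_leaf() const noexcept { return grad_fn_ == nullptr; }
  uint32_t output_nr() const noexcept { return output_nr_; }

  // Interior: (grad_fn, output_nr). Leaf: (accumulator, 0).
  ToyEdge gradient_edge() const {
    if (grad_fn_) return ToyEdge{grad_fn_, output_nr_};
    if (!accumulator_) accumulator_ = std::make_shared<ToyNode>(ToyNode{"AccumulateGrad"});
    return ToyEdge{accumulator_, 0};
  }

 private:
  std::shared_ptr<ToyNode> grad_fn_;
  uint32_t output_nr_ = 0;
  mutable std::shared_ptr<ToyNode> accumulator_;  // held strongly for brevity
};
```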

| void | bump_version () noexcept |

Increments the version count of this Variable.

| void | set_version_counter (const VariableVersion &version_counter) noexcept |

| const VariableVersion & | version_counter () const noexcept |

Retrieves this Variable's version counter.

| uint32_t | current_version () const noexcept |

Retrieves the current value of the Variable's version counter.
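Autograd uses the version counter to detect when a tensor saved for the backward pass has been modified in place, and views share their base's counter so that an in-place write through either one is noticed. The sketch below models bump_version(), set_version_counter(), version_counter() and current_version() in plain C++. `ToyVersion`, `ToyVariable` and `ToySavedVariable` are hypothetical, and the exception message is illustrative only.

```cpp
#include <cassert>
#include <cstdint>
#include <memory>
#include <stdexcept>

// Hypothetical shared counter; copies refer to the same count.
class ToyVersion {
 public:
  ToyVersion() : count_(std::make_shared<uint32_t>(0)) {}
  void bump() noexcept { ++*count_; }
  uint32_t current() const noexcept { return *count_; }

 private:
  std::shared_ptr<uint32_t> count_;
};

class ToyVariable {
 public:
  void bump_version() noexcept { version_.bump(); }  // call after every in-place op
  void set_version_counter(const ToyVersion& vc) noexcept { version_ = vc; }
  const ToyVersion& version_counter() const noexcept { return version_; }
  uint32_t current_version() const noexcept { return version_.current(); }

 private:
  ToyVersion version_;
};

// Snapshot taken when a Variable is saved for the backward pass.
class ToySavedVariable {
 public:
  explicit ToySavedVariable(const ToyVariable& v)
      : var_(v), saved_version_(v.current_version()) {}

  const ToyVariable& unpack() const {
    if (var_.current_version() != saved_version_) {
      throw std::runtime_error("saved variable was modified by an in-place operation");
    }
    return var_;
  }

 private:
  ToyVariable var_;  // the copy shares the counter, so later bumps are visible
  uint32_t saved_version_;
};
```

Sharing the counter through a `shared_ptr` is what lets a bump on a view invalidate a snapshot taken of its base.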

| void | rebase_history (Edge gradient_edge) |

Updates the grad_fn of an existing Variable.

| void | add_hook (std::shared_ptr< FunctionPreHook > hook) |

| const std::vector< std::shared_ptr< FunctionPreHook > > & | hooks () const noexcept |

| void | clear_hooks () |
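add_hook(), hooks() and clear_hooks() manage a list of FunctionPreHook objects attached to the Variable. The sketch below models that list in plain C++ with a scalar gradient. `ToyPreHook`, `ScaleHook` and the `apply_hooks()` helper are hypothetical, and running the hooks in registration order is an assumption of the sketch, not something this page states.

```cpp
#include <cassert>
#include <memory>
#include <utility>
#include <vector>

// Hypothetical pre-hook: sees an incoming gradient and may replace it.
struct ToyPreHook {
  virtual ~ToyPreHook() = default;
  virtual double operator()(double grad) = 0;
};

// Example hook that rescales the gradient.
struct ScaleHook : ToyPreHook {
  explicit ScaleHook(double f) : factor(f) {}
  double operator()(double grad) override { return grad * factor; }
  double factor;
};

class ToyVariable {
 public:
  void add_hook(std::shared_ptr<ToyPreHook> hook) { hooks_.push_back(std::move(hook)); }
  const std::vector<std::shared_ptr<ToyPreHook>>& hooks() const noexcept { return hooks_; }
  void clear_hooks() { hooks_.clear(); }

  // Runs every registered hook, in order, before the gradient is consumed.
  double apply_hooks(double grad) const {
    for (const auto& hook : hooks_) grad = (*hook)(grad);
    return grad;
  }

 private:
  std::vector<std::shared_ptr<ToyPreHook>> hooks_;
};
```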

| bool | is_view () const noexcept |

Returns true if this Variable is a view of another Variable.

| const Variable & | base () const |

Returns the Variable that this Variable is a view of.
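is_view() and base() can be pictured as an optional pointer from a view back to the Variable it was taken from. The plain-C++ model below is a hypothetical sketch: `make_view()` is an illustrative factory, throwing from `base()` on a non-view is the sketch's choice, and collapsing a view of a view so that `base()` always names a non-view Variable is an assumption of the sketch.

```cpp
#include <cassert>
#include <memory>
#include <stdexcept>
#include <string>
#include <utility>

class ToyVariable {
 public:
  explicit ToyVariable(std::string name) : name_(std::move(name)) {}

  // Hypothetical factory for a view of `of`; chains collapse to the root base.
  static std::shared_ptr<ToyVariable> make_view(const std::shared_ptr<ToyVariable>& of,
                                                std::string name) {
    auto view = std::make_shared<ToyVariable>(std::move(name));
    view->base_ = of->is_view() ? of->base_ : of;
    return view;
  }

  bool is_view() const noexcept { return base_ != nullptr; }

  const ToyVariable& base() const {
    if (!is_view()) throw std::runtime_error(name_ + " is not a view");
    return *base_;
  }

  const std::string& name() const noexcept { return name_; }

 private:
  std::string name_;
  std::shared_ptr<ToyVariable> base_;  // null unless this is a view
};
```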

| void | set_name (const std::string &name) |

| const std::string & | name () const noexcept |

| PyObject * | pyobj () const noexcept |

| void | set_pyobj (PyObject *pyobj) noexcept |

| Variable::AutogradMeta * | get_autograd_meta () const noexcept |

| void | set_requires_grad (bool requires_grad, at::TensorImpl *self_impl) override |

Sets the requires_grad property of the Variable.

| bool | requires_grad () const override |

| Variable & | grad () override |

Accesses the gradient Variable of this Variable.

| const Variable & | grad () const override |

| bool | requires_grad () const override |

| Impl (at::Tensor data, std::unique_ptr< Variable::AutogradMeta > autograd_meta, bool requires_grad=false, Edge gradient_edge=Edge()) | |

| int64_t | numel () const override |

| at::IntArrayRef | sizes () const override |

| at::IntArrayRef | strides () const override |

| bool | is_contiguous () const override |

| int64_t | size (int64_t d) const override |

| int64_t | stride (int64_t d) const override |

| void | resize_dim (int64_t ndim) override |

| void | set_size (int64_t dim, int64_t new_size) override |

| void | set_stride (int64_t dim, int64_t new_stride) override |

| void | set_storage_offset (int64_t storage_offset) override |

| int64_t | dim () const override |

| bool | has_storage () const override |

| const at::Storage & | storage () const override |

| void * | slow_data () const override |

| void | set_data (const at::Tensor &new_data) |

| void | release_resources () override |

Resets all expensive fields to free up resources.

| Variable::AutogradMeta * | get_autograd_meta () const |

| int64_t | storage_offset () const override |

| DifferentiableViewImpl (Variable base, at::Tensor data, Edge gradient_edge, std::unique_ptr< Variable::DifferentiableViewMeta > autograd_meta) | |

| void | release_resources () override |

Resets all expensive fields to free up resources.

Public Member Functions inherited from at::Tensor

| Tensor (c10::intrusive_ptr< TensorImpl, UndefinedTensorImpl > tensor_impl) | |

| Tensor (const Tensor &)=default | |

| Tensor (Tensor &&)=default | |

| Tensor (C10Tensor tensor) | |

| operator C10Tensor () const & | |

| operator C10Tensor ()&& | |

| int64_t | dim () const |

| int64_t | storage_offset () const |

| TensorImpl * | unsafeGetTensorImpl () const |

| TensorImpl * | unsafeReleaseTensorImpl () |

| const c10::intrusive_ptr< TensorImpl, UndefinedTensorImpl > & | getIntrusivePtr () const |

| bool | defined () const |

| void | reset () |

| Tensor & | operator= (const Tensor &x)& |

| Tensor & | operator= (Tensor &&x)& |

| Tensor & | operator= (Scalar v)&& |

| Tensor & | operator= (const Tensor &)&& |

| Tensor & | operator= (Tensor &&)&& |

| bool | is_same (const Tensor &other) const noexcept |

| size_t | use_count () const noexcept |

| size_t | weak_use_count () const noexcept |

| const char * | toString () const |

| IntArrayRef | sizes () const |

| IntArrayRef | strides () const |

| int64_t | ndimension () const |

| bool | is_contiguous () const |

| size_t | nbytes () const |

| size_t | itemsize () const |

| size_t | element_size () const |

| Type & | type () const |

| TensorTypeId | type_id () const |

| ScalarType | scalar_type () const |

| bool | has_storage () const |

| const Storage & | storage () const |

| bool | is_alias_of (const at::Tensor &other) const |

| Tensor | toType (const Type &t, bool non_blocking=false) const |

| Tensor & | copy_ (const Tensor &src, bool non_blocking=false) |

| Tensor | toType (ScalarType t) const |

| Tensor | toBackend (Backend b) const |

| bool | is_variable () const noexcept |

Returns true if the Tensor is actually a torch::autograd::Variable.

| Layout | layout () const noexcept |

Returns a Tensor's layout. Defined in Type.h.

| caffe2::TypeMeta | dtype () const noexcept |

Returns a Tensor's dtype (TypeMeta). Defined in TensorMethods.h.

| Device | device () const |

Returns a Tensor's device.

| int64_t | get_device () const |

Returns a Tensor's device index.

| bool | is_cuda () const |

Returns true if the Tensor has a CUDA backend.

| bool | is_hip () const |

Returns true if the Tensor has a HIP backend.

| bool | is_sparse () const |

Returns true if the Tensor has a sparse backend.

| TensorOptions | options () const |

Returns the TensorOptions corresponding to this Tensor.

| template<typename T > | |

| T * | data () const |

| template<typename T > | |

| T | item () const |

| void | print () const |

| template<typename T , size_t N> | |

| TensorAccessor< T, N > | accessor () const & |

| template<typename T , size_t N> | |

| TensorAccessor< T, N > | accessor ()&&=delete |

| template<typename T , size_t N, template< typename U > class PtrTraits = DefaultPtrTraits, typename index_t = int64_t> | |

| PackedTensorAccessor< T, N, PtrTraits, index_t > | packed_accessor () const & |

| template<typename T , size_t N, template< typename U > class PtrTraits = DefaultPtrTraits, typename index_t = int64_t> | |

| PackedTensorAccessor< T, N > | packed_accessor ()&&=delete |
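accessor() and packed_accessor() return objects that index raw element data through the tensor's sizes and strides. The plain-C++ sketch below shows that offset arithmetic for two dimensions without libtorch; `ToyAccessor2D` is hypothetical and is not the library's `TensorAccessor`.

```cpp
#include <array>
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical 2-D strided accessor over memory it does not own.
template <typename T>
class ToyAccessor2D {
 public:
  ToyAccessor2D(T* data, std::array<int64_t, 2> sizes, std::array<int64_t, 2> strides)
      : data_(data), sizes_(sizes), strides_(strides) {}

  // Element (i, j) lives at data_[i * stride0 + j * stride1].
  T& operator()(int64_t i, int64_t j) const {
    assert(i >= 0 && i < sizes_[0] && j >= 0 && j < sizes_[1]);
    return data_[i * strides_[0] + j * strides_[1]];
  }

  int64_t size(int64_t d) const { return sizes_[d]; }

 private:
  T* data_;
  std::array<int64_t, 2> sizes_;
  std::array<int64_t, 2> strides_;
};
```

Swapping both sizes and strides gives a transposed view of the same buffer without copying. The deleted `&&` overloads above presumably exist so that an accessor cannot be taken from a temporary tensor whose memory would disappear at the end of the expression.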

| Tensor | operator- () const |

| Tensor & | operator+= (const Tensor &other) |

| Tensor & | operator+= (Scalar other) |

| Tensor & | operator-= (const Tensor &other) |

| Tensor & | operator-= (Scalar other) |

| Tensor & | operator*= (const Tensor &other) |

| Tensor & | operator*= (Scalar other) |

| Tensor & | operator/= (const Tensor &other) |

| Tensor & | operator/= (Scalar other) |

| Tensor | operator[] (Scalar index) const |

| Tensor | operator[] (Tensor index) const |

| Tensor | operator[] (int64_t index) const |

| Tensor | cpu () const |

| Tensor | cuda () const |

| Tensor | hip () const |

| Tensor & | set_requires_grad (bool requires_grad) |

| bool | requires_grad () const |

| Tensor & | grad () |

| const Tensor & | grad () const |

| void | set_data (Tensor new_data) |

| void | backward (c10::optional< Tensor > gradient=c10::nullopt, bool keep_graph=false, bool create_graph=false) |

Computes the gradient of the current tensor w.r.t. graph leaves.

| Tensor | abs () const |

| Tensor & | abs_ () |

| Tensor | acos () const |

| Tensor & | acos_ () |

| Tensor | add (const Tensor &other, Scalar alpha=1) const |

| Tensor & | add_ (const Tensor &other, Scalar alpha=1) |

| Tensor | add (Scalar other, Scalar alpha=1) const |

| Tensor & | add_ (Scalar other, Scalar alpha=1) |

| Tensor | addmv (const Tensor &mat, const Tensor &vec, Scalar beta=1, Scalar alpha=1) const |

| Tensor & | addmv_ (const Tensor &mat, const Tensor &vec, Scalar beta=1, Scalar alpha=1) |

| Tensor | addr (const Tensor &vec1, const Tensor &vec2, Scalar beta=1, Scalar alpha=1) const |

| Tensor & | addr_ (const Tensor &vec1, const Tensor &vec2, Scalar beta=1, Scalar alpha=1) |

| Tensor | all (int64_t dim, bool keepdim=false) const |

| bool | allclose (const Tensor &other, double rtol=1e-05, double atol=1e-08, bool equal_nan=false) const |

| Tensor | any (int64_t dim, bool keepdim=false) const |

| Tensor | argmax (c10::optional< int64_t > dim=c10::nullopt, bool keepdim=false) const |

| Tensor | argmin (c10::optional< int64_t > dim=c10::nullopt, bool keepdim=false) const |

| Tensor | as_strided (IntArrayRef size, IntArrayRef stride, c10::optional< int64_t > storage_offset=c10::nullopt) const |

| Tensor & | as_strided_ (IntArrayRef size, IntArrayRef stride, c10::optional< int64_t > storage_offset=c10::nullopt) |

| Tensor | asin () const |

| Tensor & | asin_ () |

| Tensor | atan () const |

| Tensor & | atan_ () |

| Tensor | baddbmm (const Tensor &batch1, const Tensor &batch2, Scalar beta=1, Scalar alpha=1) const |

| Tensor & | baddbmm_ (const Tensor &batch1, const Tensor &batch2, Scalar beta=1, Scalar alpha=1) |

| Tensor | bernoulli (Generator *generator=nullptr) const |

| Tensor & | bernoulli_ (const Tensor &p, Generator *generator=nullptr) |

| Tensor & | bernoulli_ (double p=0.5, Generator *generator=nullptr) |

| Tensor | bernoulli (double p, Generator *generator=nullptr) const |

| Tensor | bincount (const Tensor &weights={}, int64_t minlength=0) const |

| Tensor | bmm (const Tensor &mat2) const |

| Tensor | ceil () const |

| Tensor & | ceil_ () |

| std::vector< Tensor > | chunk (int64_t chunks, int64_t dim=0) const |

| Tensor | clamp (c10::optional< Scalar > min=c10::nullopt, c10::optional< Scalar > max=c10::nullopt) const |

| Tensor & | clamp_ (c10::optional< Scalar > min=c10::nullopt, c10::optional< Scalar > max=c10::nullopt) |

| Tensor | clamp_max (Scalar max) const |

| Tensor & | clamp_max_ (Scalar max) |

| Tensor | clamp_min (Scalar min) const |

| Tensor & | clamp_min_ (Scalar min) |

| Tensor | contiguous () const |

| Tensor | cos () const |

| Tensor & | cos_ () |

| Tensor | cosh () const |

| Tensor & | cosh_ () |

| Tensor | cumsum (int64_t dim, ScalarType dtype) const |

| Tensor | cumsum (int64_t dim) const |

| Tensor | cumprod (int64_t dim, ScalarType dtype) const |

| Tensor | cumprod (int64_t dim) const |

| Tensor | det () const |

| Tensor | diag_embed (int64_t offset=0, int64_t dim1=-2, int64_t dim2=-1) const |

| Tensor | diagflat (int64_t offset=0) const |

| Tensor | diagonal (int64_t offset=0, int64_t dim1=0, int64_t dim2=1) const |

| Tensor | div (const Tensor &other) const |

| Tensor & | div_ (const Tensor &other) |

| Tensor | div (Scalar other) const |

| Tensor & | div_ (Scalar other) |

| Tensor | dot (const Tensor &tensor) const |

| Tensor & | resize_ (IntArrayRef size) |

| Tensor | erf () const |

| Tensor & | erf_ () |

| Tensor | erfc () const |

| Tensor & | erfc_ () |

| Tensor | exp () const |

| Tensor & | exp_ () |

| Tensor | expm1 () const |

| Tensor & | expm1_ () |

| Tensor | expand (IntArrayRef size, bool implicit=false) const |

| Tensor | expand_as (const Tensor &other) const |

| Tensor | flatten (int64_t start_dim=0, int64_t end_dim=-1) const |

| Tensor & | fill_ (Scalar value) |

| Tensor & | fill_ (const Tensor &value) |

| Tensor | floor () const |

| Tensor & | floor_ () |

| Tensor | ger (const Tensor &vec2) const |

| Tensor | fft (int64_t signal_ndim, bool normalized=false) const |

| Tensor | ifft (int64_t signal_ndim, bool normalized=false) const |

| Tensor | rfft (int64_t signal_ndim, bool normalized=false, bool onesided=true) const |

| Tensor | irfft (int64_t signal_ndim, bool normalized=false, bool onesided=true, IntArrayRef signal_sizes={}) const |

| Tensor | index (TensorList indices) const |

| Tensor & | index_copy_ (int64_t dim, const Tensor &index, const Tensor &source) |

| Tensor | index_copy (int64_t dim, const Tensor &index, const Tensor &source) const |

| Tensor & | index_put_ (TensorList indices, const Tensor &values, bool accumulate=false) |

| Tensor | index_put (TensorList indices, const Tensor &values, bool accumulate=false) const |

| Tensor | inverse () const |

| Tensor | isclose (const Tensor &other, double rtol=1e-05, double atol=1e-08, bool equal_nan=false) const |

| bool | is_distributed () const |

| bool | is_floating_point () const |

| bool | is_complex () const |

| bool | is_nonzero () const |

| bool | is_same_size (const Tensor &other) const |

| bool | is_signed () const |

| std::tuple< Tensor, Tensor > | kthvalue (int64_t k, int64_t dim=-1, bool keepdim=false) const |

| Tensor | log () const |

| Tensor & | log_ () |

| Tensor | log10 () const |

| Tensor & | log10_ () |

| Tensor | log1p () const |

| Tensor & | log1p_ () |

| Tensor | log2 () const |

| Tensor & | log2_ () |

| Tensor | logdet () const |

| Tensor | log_softmax (int64_t dim, ScalarType dtype) const |

| Tensor | log_softmax (int64_t dim) const |

| Tensor | logsumexp (IntArrayRef dim, bool keepdim=false) const |

| Tensor | matmul (const Tensor &other) const |

| Tensor | matrix_power (int64_t n) const |

| std::tuple< Tensor, Tensor > | max (int64_t dim, bool keepdim=false) const |

| Tensor | max_values (IntArrayRef dim, bool keepdim=false) const |

| Tensor | mean (ScalarType dtype) const |

| Tensor | mean () const |

| Tensor | mean (IntArrayRef dim, bool keepdim, ScalarType dtype) const |

| Tensor | mean (IntArrayRef dim, bool keepdim=false) const |

| Tensor | mean (IntArrayRef dim, ScalarType dtype) const |

| std::tuple< Tensor, Tensor > | median (int64_t dim, bool keepdim=false) const |

| std::tuple< Tensor, Tensor > | min (int64_t dim, bool keepdim=false) const |

| Tensor | min_values (IntArrayRef dim, bool keepdim=false) const |

| Tensor | mm (const Tensor &mat2) const |

| std::tuple< Tensor, Tensor > | mode (int64_t dim=-1, bool keepdim=false) const |

| Tensor | mul (const Tensor &other) const |

| Tensor & | mul_ (const Tensor &other) |

| Tensor | mul (Scalar other) const |

| Tensor & | mul_ (Scalar other) |

| Tensor | mv (const Tensor &vec) const |

| Tensor | mvlgamma (int64_t p) const |

| Tensor & | mvlgamma_ (int64_t p) |

| Tensor | narrow_copy (int64_t dim, int64_t start, int64_t length) const |

| Tensor | narrow (int64_t dim, int64_t start, int64_t length) const |

| Tensor | permute (IntArrayRef dims) const |

| Tensor | pin_memory () const |

| Tensor | pinverse (double rcond=1e-15) const |

| Tensor | repeat (IntArrayRef repeats) const |

| Tensor | reshape (IntArrayRef shape) const |

| Tensor | reshape_as (const Tensor &other) const |

| Tensor | round () const |

| Tensor & | round_ () |

| Tensor | relu () const |

| Tensor & | relu_ () |

| Tensor | prelu (const Tensor &weight) const |

| std::tuple< Tensor, Tensor > | prelu_backward (const Tensor &grad_output, const Tensor &weight) const |

| Tensor | hardshrink (Scalar lambd=0.5) const |

| Tensor | hardshrink_backward (const Tensor &grad_out, Scalar lambd) const |

| Tensor | rsqrt () const |

| Tensor & | rsqrt_ () |

| Tensor | select (int64_t dim, int64_t index) const |

| Tensor | sigmoid () const |

| Tensor & | sigmoid_ () |

| Tensor | sin () const |

| Tensor & | sin_ () |

| Tensor | sinh () const |

| Tensor & | sinh_ () |

| Tensor | detach () const |

| Tensor & | detach_ () |

| int64_t | size (int64_t dim) const |

| Tensor | slice (int64_t dim=0, int64_t start=0, int64_t end=9223372036854775807, int64_t step=1) const |

| std::tuple< Tensor, Tensor > | slogdet () const |

| Tensor | smm (const Tensor &mat2) const |

| Tensor | softmax (int64_t dim, ScalarType dtype) const |

| Tensor | softmax (int64_t dim) const |

| std::vector< Tensor > | split (int64_t split_size, int64_t dim=0) const |

| std::vector< Tensor > | split_with_sizes (IntArrayRef split_sizes, int64_t dim=0) const |

| Tensor | squeeze () const |

| Tensor | squeeze (int64_t dim) const |

| Tensor & | squeeze_ () |

| Tensor & | squeeze_ (int64_t dim) |

| Tensor | sspaddmm (const Tensor &mat1, const Tensor &mat2, Scalar beta=1, Scalar alpha=1) const |

| Tensor | stft (int64_t n_fft, c10::optional< int64_t > hop_length=c10::nullopt, c10::optional< int64_t > win_length=c10::nullopt, const Tensor &window={}, bool normalized=false, bool onesided=true) const |

| int64_t | stride (int64_t dim) const |

| Tensor | sum (ScalarType dtype) const |

| Tensor | sum () const |

| Tensor | sum (IntArrayRef dim, bool keepdim, ScalarType dtype) const |

| Tensor | sum (IntArrayRef dim, bool keepdim=false) const |

| Tensor | sum (IntArrayRef dim, ScalarType dtype) const |

| Tensor | sum_to_size (IntArrayRef size) const |

| Tensor | sqrt () const |

| Tensor & | sqrt_ () |

| Tensor | std (bool unbiased=true) const |

| Tensor | std (IntArrayRef dim, bool unbiased=true, bool keepdim=false) const |

| Tensor | prod (ScalarType dtype) const |

| Tensor | prod () const |

| Tensor | prod (int64_t dim, bool keepdim, ScalarType dtype) const |

| Tensor | prod (int64_t dim, bool keepdim=false) const |

| Tensor | prod (int64_t dim, ScalarType dtype) const |

| Tensor | t () const |

| Tensor & | t_ () |

| Tensor | tan () const |

| Tensor & | tan_ () |

| Tensor | tanh () const |

| Tensor & | tanh_ () |

| Tensor | transpose (int64_t dim0, int64_t dim1) const |

| Tensor & | transpose_ (int64_t dim0, int64_t dim1) |

| Tensor | flip (IntArrayRef dims) const |

| Tensor | roll (IntArrayRef shifts, IntArrayRef dims={}) const |

| Tensor | rot90 (int64_t k=1, IntArrayRef dims={0, 1}) const |

| Tensor | trunc () const |

| Tensor & | trunc_ () |

| Tensor | type_as (const Tensor &other) const |

| Tensor | unsqueeze (int64_t dim) const |

| Tensor & | unsqueeze_ (int64_t dim) |

| Tensor | var (bool unbiased=true) const |

| Tensor | var (IntArrayRef dim, bool unbiased=true, bool keepdim=false) const |

| Tensor | view_as (const Tensor &other) const |

| Tensor | where (const Tensor &condition, const Tensor &other) const |

| Tensor | norm (c10::optional< Scalar > p, ScalarType dtype) const |

| Tensor | norm (Scalar p=2) const |

| Tensor | norm (c10::optional< Scalar > p, IntArrayRef dim, bool keepdim, ScalarType dtype) const |

| Tensor | norm (c10::optional< Scalar > p, IntArrayRef dim, bool keepdim=false) const |

| Tensor | clone () const |

| Tensor & | resize_as_ (const Tensor &the_template) |

| Tensor | pow (Scalar exponent) const |

| Tensor & | zero_ () |

| Tensor | sub (const Tensor &other, Scalar alpha=1) const |

| Tensor & | sub_ (const Tensor &other, Scalar alpha=1) |

| Tensor | sub (Scalar other, Scalar alpha=1) const |

| Tensor & | sub_ (Scalar other, Scalar alpha=1) |

| Tensor | addmm (const Tensor &mat1, const Tensor &mat2, Scalar beta=1, Scalar alpha=1) const |

| Tensor & | addmm_ (const Tensor &mat1, const Tensor &mat2, Scalar beta=1, Scalar alpha=1) |

| Tensor & | sparse_resize_ (IntArrayRef size, int64_t sparse_dim, int64_t dense_dim) |

| Tensor & | sparse_resize_and_clear_ (IntArrayRef size, int64_t sparse_dim, int64_t dense_dim) |

| Tensor | sparse_mask (SparseTensorRef mask) const |

| Tensor | to_dense () const |

| int64_t | sparse_dim () const |

| int64_t | _dimI () const |

| int64_t | dense_dim () const |

| int64_t | _dimV () const |

| int64_t | _nnz () const |

| Tensor | coalesce () const |

| bool | is_coalesced () const |

| Tensor | _indices () const |

| Tensor | _values () const |

| Tensor & | _coalesced_ (bool coalesced) |

| Tensor | indices () const |

| Tensor | values () const |

| int64_t | numel () const |

| std::vector< Tensor > | unbind (int64_t dim=0) const |

| Tensor | to_sparse (int64_t sparse_dim) const |

| Tensor | to_sparse () const |

| Tensor | to (const TensorOptions &options, bool non_blocking=false, bool copy=false) const |

| Tensor | to (Device device, ScalarType dtype, bool non_blocking=false, bool copy=false) const |

| Tensor | to (ScalarType dtype, bool non_blocking=false, bool copy=false) const |

| Tensor | to (const Tensor &other, bool non_blocking=false, bool copy=false) const |

| Scalar | item () const |

| void * | data_ptr () const |

| Tensor & | set_ (Storage source) |

| Tensor & | set_ (Storage source, int64_t storage_offset, IntArrayRef size, IntArrayRef stride={}) |

| Tensor & | set_ (const Tensor &source) |

| Tensor & | set_ () |

| bool | is_set_to (const Tensor &tensor) const |

| Tensor & | masked_fill_ (const Tensor &mask, Scalar value) |

| Tensor | masked_fill (const Tensor &mask, Scalar value) const |

| Tensor & | masked_fill_ (const Tensor &mask, const Tensor &value) |

| Tensor | masked_fill (const Tensor &mask, const Tensor &value) const |

| Tensor & | masked_scatter_ (const Tensor &mask, const Tensor &source) |

| Tensor | masked_scatter (const Tensor &mask, const Tensor &source) const |

| Tensor | view (IntArrayRef size) const |

| Tensor & | put_ (const Tensor &index, const Tensor &source, bool accumulate=false) |

| Tensor & | index_add_ (int64_t dim, const Tensor &index, const Tensor &source) |

| Tensor | index_add (int64_t dim, const Tensor &index, const Tensor &source) const |

| Tensor & | index_fill_ (int64_t dim, const Tensor &index, Scalar value) |

| Tensor | index_fill (int64_t dim, const Tensor &index, Scalar value) const |

| Tensor & | index_fill_ (int64_t dim, const Tensor &index, const Tensor &value) |

| Tensor | index_fill (int64_t dim, const Tensor &index, const Tensor &value) const |

| Tensor & | scatter_ (int64_t dim, const Tensor &index, const Tensor &src) |

| Tensor | scatter (int64_t dim, const Tensor &index, const Tensor &src) const |

| Tensor & | scatter_ (int64_t dim, const Tensor &index, Scalar value) |

| Tensor | scatter (int64_t dim, const Tensor &index, Scalar value) const |

| Tensor & | scatter_add_ (int64_t dim, const Tensor &index, const Tensor &src) |

| Tensor | scatter_add (int64_t dim, const Tensor &index, const Tensor &src) const |

| Tensor & | lt_ (Scalar other) |

| Tensor & | lt_ (const Tensor &other) |

| Tensor & | gt_ (Scalar other) |

| Tensor & | gt_ (const Tensor &other) |

| Tensor & | le_ (Scalar other) |

| Tensor & | le_ (const Tensor &other) |

| Tensor & | ge_ (Scalar other) |

| Tensor & | ge_ (const Tensor &other) |

| Tensor & | eq_ (Scalar other) |

| Tensor & | eq_ (const Tensor &other) |

| Tensor & | ne_ (Scalar other) |

| Tensor & | ne_ (const Tensor &other) |

| Tensor | __and__ (Scalar other) const |

| Tensor | __and__ (const Tensor &other) const |

| Tensor & | __iand__ (Scalar other) |

| Tensor & | __iand__ (const Tensor &other) |

| Tensor | __or__ (Scalar other) const |

| Tensor | __or__ (const Tensor &other) const |

| Tensor & | __ior__ (Scalar other) |

| Tensor & | __ior__ (const Tensor &other) |

| Tensor | __xor__ (Scalar other) const |

| Tensor | __xor__ (const Tensor &other) const |

| Tensor & | __ixor__ (Scalar other) |

| Tensor & | __ixor__ (const Tensor &other) |

| Tensor | __lshift__ (Scalar other) const |

| Tensor | __lshift__ (const Tensor &other) const |

| Tensor & | __ilshift__ (Scalar other) |

| Tensor & | __ilshift__ (const Tensor &other) |

| Tensor | __rshift__ (Scalar other) const |

| Tensor | __rshift__ (const Tensor &other) const |

| Tensor & | __irshift__ (Scalar other) |

| Tensor & | __irshift__ (const Tensor &other) |

| Tensor & | lgamma_ () |

| Tensor & | atan2_ (const Tensor &other) |

| Tensor & | tril_ (int64_t diagonal=0) |

| Tensor & | triu_ (int64_t diagonal=0) |

| Tensor & | digamma_ () |

| Tensor & | polygamma_ (int64_t n) |

| Tensor & | erfinv_ () |

| Tensor & | frac_ () |

| Tensor & | renorm_ (Scalar p, int64_t dim, Scalar maxnorm) |

| Tensor & | reciprocal_ () |

| Tensor & | neg_ () |

| Tensor & | pow_ (Scalar exponent) |

| Tensor & | pow_ (const Tensor &exponent) |

| Tensor & | lerp_ (const Tensor &end, Scalar weight) |

| Tensor & | lerp_ (const Tensor &end, const Tensor &weight) |

| Tensor & | sign_ () |

| Tensor & | fmod_ (Scalar other) |

| Tensor & | fmod_ (const Tensor &other) |

| Tensor & | remainder_ (Scalar other) |

| Tensor & | remainder_ (const Tensor &other) |

| Tensor & | addbmm_ (const Tensor &batch1, const Tensor &batch2, Scalar beta=1, Scalar alpha=1) |

| Tensor | addbmm (const Tensor &batch1, const Tensor &batch2, Scalar beta=1, Scalar alpha=1) const |

| Tensor & | addcmul_ (const Tensor &tensor1, const Tensor &tensor2, Scalar value=1) |

| Tensor & | addcdiv_ (const Tensor &tensor1, const Tensor &tensor2, Scalar value=1) |

| Tensor & | random_ (int64_t from, int64_t to, Generator *generator=nullptr) |

| Tensor & | random_ (int64_t to, Generator *generator=nullptr) |

| Tensor & | random_ (Generator *generator=nullptr) |

| Tensor & | uniform_ (double from=0, double to=1, Generator *generator=nullptr) |

| Tensor & | normal_ (double mean=0, double std=1, Generator *generator=nullptr) |

| Tensor & | cauchy_ (double median=0, double sigma=1, Generator *generator=nullptr) |

| Tensor & | log_normal_ (double mean=1, double std=2, Generator *generator=nullptr) |

| Tensor & | exponential_ (double lambd=1, Generator *generator=nullptr) |

| Tensor & | geometric_ (double p, Generator *generator=nullptr) |

| Tensor | diag (int64_t diagonal=0) const |

| Tensor | cross (const Tensor &other, int64_t dim=-1) const |

| Tensor | triu (int64_t diagonal=0) const |

| Tensor | tril (int64_t diagonal=0) const |

| Tensor | trace () const |

| Tensor | ne (Scalar other) const |

| Tensor | ne (const Tensor &other) const |

| Tensor | eq (Scalar other) const |

| Tensor | eq (const Tensor &other) const |

| Tensor | ge (Scalar other) const |

| Tensor | ge (const Tensor &other) const |

| Tensor | le (Scalar other) const |

| Tensor | le (const Tensor &other) const |

| Tensor | gt (Scalar other) const |

| Tensor | gt (const Tensor &other) const |

| Tensor | lt (Scalar other) const |

| Tensor | lt (const Tensor &other) const |

| Tensor | take (const Tensor &index) const |

| Tensor | index_select (int64_t dim, const Tensor &index) const |

| Tensor | masked_select (const Tensor &mask) const |

| Tensor | nonzero () const |

| Tensor | gather (int64_t dim, const Tensor &index, bool sparse_grad=false) const |

| Tensor | addcmul (const Tensor &tensor1, const Tensor &tensor2, Scalar value=1) const |

| Tensor | addcdiv (const Tensor &tensor1, const Tensor &tensor2, Scalar value=1) const |

| std::tuple< Tensor, Tensor > | gels (const Tensor &A) const |

| std::tuple< Tensor, Tensor > | trtrs (const Tensor &A, bool upper=true, bool transpose=false, bool unitriangular=false) const |

| std::tuple< Tensor, Tensor > | symeig (bool eigenvectors=false, bool upper=true) const |

| std::tuple< Tensor, Tensor > | eig (bool eigenvectors=false) const |

| std::tuple< Tensor, Tensor, Tensor > | svd (bool some=true, bool compute_uv=true) const |

| Tensor | cholesky (bool upper=false) const |

| Tensor | cholesky_solve (const Tensor &input2, bool upper=false) const |

| std::tuple< Tensor, Tensor > | solve (const Tensor &A) const |

| Tensor | potri (bool upper=true) const |

| std::tuple< Tensor, Tensor > | pstrf (bool upper=true, Scalar tol=-1) const |

| std::tuple< Tensor, Tensor > | qr () const |

| std::tuple< Tensor, Tensor > | geqrf () const |

| Tensor | orgqr (const Tensor &input2) const |

| Tensor | ormqr (const Tensor &input2, const Tensor &input3, bool left=true, bool transpose=false) const |

| std::tuple< Tensor, Tensor > | btrifact (bool pivot=true) const |

| std::tuple< Tensor, Tensor, Tensor > | btrifact_with_info (bool pivot=true) const |

| Tensor | btrisolve (const Tensor &LU_data, const Tensor &LU_pivots) const |

| Tensor | multinomial (int64_t num_samples, bool replacement=false, Generator *generator=nullptr) const |

| Tensor | lgamma () const |

| Tensor | digamma () const |

| Tensor | polygamma (int64_t n) const |

| Tensor | erfinv () const |

| Tensor | frac () const |

| Tensor | dist (const Tensor &other, Scalar p=2) const |

| Tensor | reciprocal () const |

| Tensor | neg () const |

| Tensor | atan2 (const Tensor &other) const |

| Tensor | lerp (const Tensor &end, Scalar weight) const |

| Tensor | lerp (const Tensor &end, const Tensor &weight) const |

| Tensor | histc (int64_t bins=100, Scalar min=0, Scalar max=0) const |

| Tensor | sign () const |

| Tensor | fmod (Scalar other) const |

| Tensor | fmod (const Tensor &other) const |

| Tensor | remainder (Scalar other) const |

| Tensor | remainder (const Tensor &other) const |

| Tensor | min (const Tensor &other) const |

| Tensor | min () const |

| Tensor | max (const Tensor &other) const |

| Tensor | max () const |

| Tensor | median () const |

| std::tuple< Tensor, Tensor > | sort (int64_t dim=-1, bool descending=false) const |

| Tensor | argsort (int64_t dim=-1, bool descending=false) const |

| std::tuple< Tensor, Tensor > | topk (int64_t k, int64_t dim=-1, bool largest=true, bool sorted=true) const |

| Tensor | all () const |

| Tensor | any () const |

| Tensor | renorm (Scalar p, int64_t dim, Scalar maxnorm) const |

| Tensor | unfold (int64_t dimension, int64_t size, int64_t step) const |

| bool | equal (const Tensor &other) const |

| Tensor | pow (const Tensor &exponent) const |

| Tensor | alias () const |

| Tensor | to (caffe2::TypeMeta type_meta, bool non_blocking=false, bool copy=false) const |

| Tensor | to (Device device, caffe2::TypeMeta type_meta, bool non_blocking=false, bool copy=false) const |

| template<typename F , typename... Args> | |

| auto | m (F func, Args &&...params) const -> decltype(func(*this, std::forward< Args >(params)...)) |

| Tensor (c10::intrusive_ptr< TensorImpl, UndefinedTensorImpl > tensor_impl) | |

| Tensor (const Tensor &)=default | |

| Tensor (Tensor &&)=default | |

| Tensor (C10Tensor tensor) | |

| operator C10Tensor () const & | |

| operator C10Tensor ()&& | |

| int64_t | dim () const |

| int64_t | storage_offset () const |

| TensorImpl * | unsafeGetTensorImpl () const |

| TensorImpl * | unsafeReleaseTensorImpl () |

| const c10::intrusive_ptr< TensorImpl, UndefinedTensorImpl > & | getIntrusivePtr () const |

| bool | defined () const |

| void | reset () |

| Tensor & | operator= (const Tensor &x)& |

| Tensor & | operator= (Tensor &&x)& |

| Tensor & | operator= (Scalar v)&& |

| Tensor & | operator= (const Tensor &)&& |

| Tensor & | operator= (Tensor &&)&& |

| bool | is_same (const Tensor &other) const noexcept |

| size_t | use_count () const noexcept |

| size_t | weak_use_count () const noexcept |

| const char * | toString () const |

| IntArrayRef | sizes () const |

| IntArrayRef | strides () const |

| int64_t | ndimension () const |

| bool | is_contiguous () const |

| size_t | nbytes () const |

| size_t | itemsize () const |

| size_t | element_size () const |

| Type & | type () const |

| TensorTypeId | type_id () const |

| ScalarType | scalar_type () const |

| bool | has_storage () const |

| const Storage & | storage () const |

| bool | is_alias_of (const at::Tensor &other) const |

| Tensor | toType (const Type &t, bool non_blocking=false) const |

| Tensor & | copy_ (const Tensor &src, bool non_blocking=false) |

| Tensor | toType (ScalarType t) const |

| Tensor | toBackend (Backend b) const |

| bool | is_variable () const noexcept |

Returns true if the Tensor is actually a torch::autograd::Variable. | |

| Layout | layout () const noexcept |

Returns a Tensor's layout. Defined in Type.h. | |

| caffe2::TypeMeta | dtype () const noexcept |

Returns a Tensor's dtype (TypeMeta). Defined in TensorMethods.h. | |

| Device | device () const |

Returns a Tensor's device. | |

| int64_t | get_device () const |

Returns a Tensor's device index. | |

| bool | is_cuda () const |

Returns whether a Tensor has the CUDA backend. | |

| bool | is_hip () const |

Returns whether a Tensor has the HIP backend. | |

| bool | is_sparse () const |

Returns whether a Tensor has a sparse backend. | |

| TensorOptions | options () const |

Returns the TensorOptions corresponding to this Tensor. | |

| template<typename T > | |

| T * | data () const |

| template<typename T > | |

| T | item () const |

| void | print () const |

| template<typename T , size_t N> | |

| TensorAccessor< T, N > | accessor () const & |

| template<typename T , size_t N> | |

| TensorAccessor< T, N > | accessor ()&&=delete |

| template<typename T , size_t N, template< typename U > class PtrTraits = DefaultPtrTraits, typename index_t = int64_t> | |

| PackedTensorAccessor< T, N, PtrTraits, index_t > | packed_accessor () const & |

| template<typename T , size_t N, template< typename U > class PtrTraits = DefaultPtrTraits, typename index_t = int64_t> | |

| PackedTensorAccessor< T, N > | packed_accessor ()&&=delete |

| Tensor | operator- () const |

| Tensor & | operator+= (const Tensor &other) |

| Tensor & | operator+= (Scalar other) |

| Tensor & | operator-= (const Tensor &other) |

| Tensor & | operator-= (Scalar other) |

| Tensor & | operator*= (const Tensor &other) |

| Tensor & | operator*= (Scalar other) |

| Tensor & | operator/= (const Tensor &other) |

| Tensor & | operator/= (Scalar other) |

| Tensor | operator[] (Scalar index) const |

| Tensor | operator[] (Tensor index) const |

| Tensor | operator[] (int64_t index) const |

| Tensor | cpu () const |

| Tensor | cuda () const |

| Tensor | hip () const |

| Tensor & | set_requires_grad (bool requires_grad) |

| bool | requires_grad () const |

| Tensor & | grad () |

| const Tensor & | grad () const |

| void | set_data (Tensor new_data) |

| void | backward (c10::optional< Tensor > gradient=c10::nullopt, bool keep_graph=false, bool create_graph=false) |

| Computes the gradient of current tensor w.r.t. graph leaves. | |

Data Fields | |

| std::string | name |

| Variable | grad_ |

| std::shared_ptr< Function > | grad_fn_ |

| std::weak_ptr< Function > | grad_accumulator_ |

| VariableVersion | version_counter_ |

| std::vector< std::shared_ptr< FunctionPreHook > > | hooks_ |

| bool | requires_grad_ |

| bool | is_view_ |

| uint32_t | output_nr_ |

| PyObject * | pyobj_ = nullptr |

| std::mutex | mutex_ |

| Variable | base_ |

The base Variable (never a view). | |

| uint32_t | attr_version |

| The value of the version_counter at the time grad_fn was created. | |

| at::Tensor | data_ |

| The underlying data tensor for this Variable. | |

Friends | |

| Variable | make_variable_view (Variable base, at::Tensor data, bool is_differentiable, bool allow_tensor_metadata_change, Edge gradient_edge) |

Creates a Variable that is a view of another (base) variable. | |

| Variable | make_variable (at::Tensor data, bool requires_grad, bool allow_tensor_metadata_change) |

Creates a Variable from the given Tensor, copying its underlying TensorImpl. | |

| Variable | make_variable_consuming (at::Tensor data, bool requires_grad, bool allow_tensor_metadata_change) |

Creates a Variable from the given Tensor, consuming its underlying TensorImpl. | |

| Variable | make_variable (at::Tensor data, Edge gradient_edge, bool allow_tensor_metadata_change) |

Creates a Variable from the given Tensor and specifies a gradient_edge, i.e. a (function, input_nr) pair. | |

Additional Inherited Members | |

Static Public Member Functions inherited from at::Tensor | |

| static Tensor | wrap_tensor_impl (c10::intrusive_ptr< TensorImpl, UndefinedTensorImpl > tensor_impl) |

Protected Member Functions inherited from at::Tensor | |

| void | enforce_invariants () |

Protected Attributes inherited from at::Tensor | |

| c10::intrusive_ptr< TensorImpl, UndefinedTensorImpl > | impl_ |

A Variable augments a Tensor with the ability to interact in our autograd machinery.

See NOTE [ Autograd View Variables ] for details on view Variables.

Each Variable has one unique AutogradMeta struct, which stores autograd metadata fields that are necessary for tracking the Variable's autograd history.

Conceptually, Variables travel along Edges between Functions in the autograd graph. A Variable can either be a leaf, like a weight in a neural network, or an interior variable, when it is the result of an operation between variables. Every Variable also stores another Variable called its grad (gradient). If the variable is a leaf, its gradient will be accumulated into this variable.
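The leaf accumulation rule can be pictured with a toy model in plain C++. This is a minimal sketch, not the libtorch API. `ToyVariable` and `accumulate_grad` are hypothetical names, and a `double` stands in for a tensor. The first gradient that reaches a leaf defines its grad, and later ones are added to it.

```cpp
#include <cassert>

// Hypothetical toy model (not the libtorch API): a scalar "variable" whose
// grad starts out undefined and is summed into on every accumulation.
struct ToyVariable {
  double value = 0.0;
  bool is_leaf = true;
  bool has_grad = false;  // mirrors an undefined grad before the first pass
  double grad = 0.0;
};

// Accumulate an incoming gradient into a leaf: the first gradient
// initializes grad, later ones are added to it.
inline void accumulate_grad(ToyVariable& leaf, double incoming) {
  assert(leaf.is_leaf && "only leaves accumulate into their own grad");
  if (leaf.has_grad) {
    leaf.grad += incoming;
  } else {
    leaf.grad = incoming;
    leaf.has_grad = true;
  }
}
```

In this toy, two backward passes that both reach the same leaf leave the sum of their gradients in its `grad`.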

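Gradient Edges

Each Variable has a gradient edge: the edge in the autograd graph that connects it to a particular input of the gradient function invoked with it during the backward pass. For an interior Variable, this is its grad_fn. For a leaf, it is its gradient accumulator.

Versioning

Another major feature of Variables is versioning. A Variable's version is incremented whenever the Variable is mutated in place. Versions are useful when constructing SavedVariables, which take a snapshot of a Variable at a certain version. You can retrieve a Variable's version through its current_version() method.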
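The following is a rough sketch of how a version counter lets a saved snapshot detect a later in-place mutation. It assumes the toy types `ToyTensor` and `ToySavedVariable`, which are hypothetical: they are not libtorch's `SavedVariable`, and the error text is made up. Copies of a `ToyTensor` share one counter. The snapshot records the version at save time and compares it on unpack.

```cpp
#include <cassert>
#include <cstdint>
#include <memory>
#include <stdexcept>
#include <vector>

// Hypothetical toy tensor handle (not libtorch): copies share both the
// storage and the version counter, as handles to one tensor would.
struct ToyTensor {
  std::shared_ptr<std::vector<double>> storage =
      std::make_shared<std::vector<double>>();
  std::shared_ptr<uint32_t> version = std::make_shared<uint32_t>(0);

  uint32_t current_version() const { return *version; }

  // An in-place mutation: changes the data and bumps the version.
  void add_(double x) {
    for (double& v : *storage) v += x;
    ++*version;
  }
};

// Snapshot of a tensor at a particular version, in the spirit of SavedVariable.
class ToySavedVariable {
 public:
  explicit ToySavedVariable(const ToyTensor& t)
      : tensor_(t), saved_version_(t.current_version()) {}

  // Refuse to hand the tensor back if it was mutated after being saved.
  const ToyTensor& unpack() const {
    if (tensor_.current_version() != saved_version_) {
      throw std::runtime_error("saved tensor was modified in place");
    }
    return tensor_;
  }

 private:
  ToyTensor tensor_;
  uint32_t saved_version_;
};
```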

Views

A Variable can be a view of another (base) Variable. In that case, the two share storage and the same version counter.

Interface

Variable inherits from Tensor, so its API is a superset of Tensor's. This means you can perform all the usual mathematical and other operations on Variables that you can perform on Tensors. Furthermore, Variable and Tensor convert implicitly to each other, so you can call functions defined on Tensors with Variables as well. For this, the Variable class allows implicit construction from Tensor. It is the responsibility of calling code to ensure that this constructor is invoked only when the Tensor's dynamic type is actually Variable. Most notably, it is not correct to construct a brand new Variable from a Tensor using this constructor; to do so, use the make_variable free function instead. To create a view variable, use make_variable_view.
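The dynamic-type contract can be modelled with a small class hierarchy. None of the names in this hypothetical sketch are libtorch types:

- A tensor's impl object records whether it is really a variable.
- `toy_make_variable` plays the role of make_variable by wrapping a plain tensor in a new variable impl.
- The implicit conversion adds a runtime check, which the documented constructor leaves to the caller.

```cpp
#include <cassert>
#include <memory>
#include <stdexcept>
#include <utility>

// Hypothetical toy hierarchy: the impl's dynamic type decides whether a
// tensor handle really refers to a variable.
struct ToyTensorImpl {
  virtual ~ToyTensorImpl() = default;
  virtual bool is_variable() const { return false; }
};

struct ToyVariableImpl : ToyTensorImpl {
  explicit ToyVariableImpl(std::shared_ptr<ToyTensorImpl> wrapped)
      : data(std::move(wrapped)) {}
  bool is_variable() const override { return true; }
  std::shared_ptr<ToyTensorImpl> data;  // the wrapped plain tensor
};

struct ToyTensor {
  std::shared_ptr<ToyTensorImpl> impl;
  bool is_variable() const { return impl && impl->is_variable(); }
};

struct ToyVariable : ToyTensor {
  // Implicit conversion from ToyTensor. The real contract only asks callers
  // to guarantee the dynamic type; this toy checks it to make misuse visible.
  ToyVariable(ToyTensor t) : ToyTensor(std::move(t)) {
    if (!is_variable()) throw std::invalid_argument("not a Variable");
  }
};

// Counterpart of make_variable: wrap a plain tensor in a new variable impl.
inline ToyVariable toy_make_variable(ToyTensor data) {
  assert(!data.is_variable() && "data must not already be a variable");
  return ToyTensor{std::make_shared<ToyVariableImpl>(std::move(data.impl))};
}
```

Converting a `ToyVariable` to `ToyTensor` and back succeeds, because the impl is still a variable impl. Converting a fresh plain tensor this way throws.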

Many operations return a Variable that shares storage with an input Variable. The returned Variable is called a view Variable of the input (base) Variable.

In PyTorch, we have two types of views: differentiable views, and non-differentiable views. In either type, to support proper version checking, the base and view Variables must always share the same version_counter.
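Here is a toy sketch of the sharing requirement. It uses the hypothetical names `ToyTensor` and `narrow` rather than the ATen API. A view aliases part of its base's storage and holds the very same counter object. An in-place write through either handle therefore bumps one version that both observe.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <memory>
#include <utility>
#include <vector>

// Hypothetical toy (not the libtorch implementation).
struct ToyVersionCounter {
  uint32_t version = 0;
};

struct ToyTensor {
  std::shared_ptr<std::vector<double>> storage;
  std::size_t offset = 0;
  std::size_t length = 0;
  std::shared_ptr<ToyVersionCounter> version_counter;

  static ToyTensor make(std::vector<double> data) {
    ToyTensor t;
    t.length = data.size();
    t.storage = std::make_shared<std::vector<double>>(std::move(data));
    t.version_counter = std::make_shared<ToyVersionCounter>();
    return t;
  }

  // A view over [start, start + len): shares storage AND the version counter.
  ToyTensor narrow(std::size_t start, std::size_t len) const {
    assert(start + len <= length);
    return ToyTensor{storage, offset + start, len, version_counter};
  }

  // In-place write: mutates the shared storage and bumps the shared counter.
  void fill_(double x) {
    for (std::size_t i = 0; i < length; ++i) (*storage)[offset + i] = x;
    ++version_counter->version;
  }

  uint32_t current_version() const { return version_counter->version; }
  double at(std::size_t i) const { return (*storage)[offset + i]; }
};
```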

Differentiable Views

Differentiable views are views through which gradients flow back to the base Variable; their view relation is tracked with DifferentiableViewImpl. If the base is modified in place, the view's grad_fn may become stale, so grad_fn() re-creates it when accessed. If the view is modified in place, rebase_history() updates the grad_fn of the base.

Non-Differentiable Views

In certain cases, function outputs share storage with inputs but will never require gradient history tracking. Instead of registering the view relation via DifferentiableViewImpl, such views use the ordinary Variable::Impl and simply share the version counter with the base Variable. For example, sparse_tensor.indices() is an integral view on a (possibly) floating-point tensor. See the top of derivatives.yaml for how to specify that the outputs of a function are non-differentiable. These are called non-differentiable views because gradients do not flow through the view relation. The relevant logic is implemented in make_variable_view and in wrap_output of gen_variable_type.py.

Definition at line 85 of file variable.h.

| const Variable & torch::autograd::Variable::base | ( | ) | const |

inline

Returns the Variable that this Variable is a view of.

If this Variable is not a view, throws a std::runtime_error.

Definition at line 738 of file variable.h.

| uint32_t torch::autograd::Variable::current_version | ( | ) | const |

inline, noexcept

Retrieves the current value of the Variable's version counter.

Equivalent to calling version_counter().current_version().

Definition at line 707 of file variable.h.

| Variable torch::autograd::Variable::detach | ( | ) | const |

inline

Returns a copy of this Variable that is detached from its autograd graph and has a blank version.

This method may be called even if the Variable is a view.

NOTE: Previously, changing the tensor metadata (e.g. sizes, strides, storage, or storage_offset) of a tensor created by detach() also updated that metadata in the original tensor. Under the new behavior, metadata changes to the detached tensor no longer update the original tensor. For this reason, detach() sets allow_tensor_metadata_change_ to false, making such changes explicitly illegal. This prevents users from changing the metadata of the detached tensor while expecting the original tensor to be updated as well.

Definition at line 673 of file variable.h.
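The detach() behavior described above can be sketched with a toy tensor handle (hypothetical, not ATen). The detached handle shares storage with the original but owns a copy of the metadata and refuses metadata changes, mirroring allow_tensor_metadata_change_ = false. Only `sizes` is modelled here; strides and storage_offset would be handled the same way.

```cpp
#include <cassert>
#include <cstdint>
#include <memory>
#include <stdexcept>
#include <utility>
#include <vector>

// Hypothetical toy handle: data lives in shared storage, metadata is per-handle.
struct ToyTensor {
  std::shared_ptr<std::vector<double>> storage;
  std::vector<int64_t> sizes;
  bool allow_tensor_metadata_change = true;

  // Shares storage, copies metadata, and locks that metadata.
  ToyTensor detach() const {
    return ToyTensor{storage, sizes, /*allow_tensor_metadata_change=*/false};
  }

  void set_sizes(std::vector<int64_t> new_sizes) {
    if (!allow_tensor_metadata_change) {
      throw std::logic_error("metadata change not allowed on this tensor");
    }
    sizes = std::move(new_sizes);
  }
};
```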

| void torch::autograd::Variable::detach_ | ( | ) |

Like detach(), but operates on this Variable in place.

| std::shared_ptr< Function > torch::autograd::Variable::grad_accumulator | ( | ) | const |

Gets the gradient accumulator of the Variable if it has one, or else creates one on the fly and returns it.

Definition at line 111 of file variable.cpp.

| const std::shared_ptr< Function > & torch::autograd::Variable::grad_fn | ( | ) | const |

Gets the gradient function of the Variable.

If this is a leaf variable, the pointer returned will be null.

For View Variables: Gets the up-to-date grad_fn. If the shared data or base was modified, we re-create the grad_fn to express the up-to-date view relationship between this and the base Variable.

Definition at line 201 of file variable.cpp.

| Edge torch::autograd::Variable::gradient_edge | ( | ) | const |

inline

Returns the "canonical" gradient edge of this Variable, i.e. either the gradient function if this is an interior Variable, or the gradient accumulator otherwise.

If the Variable is interior, the returned Edge stores, in its input_nr field, the input index of the Function to which this Variable is connected. For leaves, input_nr is always zero. Note that set_gradient_edge and gradient_edge are not symmetric: you must use set_gradient_edge to set the grad_fn and set_grad_accumulator to set the accumulator.

Definition at line 195 of file variable.h.
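The selection rule for the canonical edge, and its asymmetry with set_gradient_edge, fit in a few lines. This toy sketch uses the hypothetical types `Node`, `ToyEdge` and `ToyVariable` in place of libtorch's `Function` and `Edge`.

```cpp
#include <cassert>
#include <cstdint>
#include <memory>
#include <string>
#include <utility>

// Hypothetical stand-in for an autograd Function.
struct Node {
  std::string name;
};

struct ToyEdge {
  std::shared_ptr<Node> function;
  uint32_t input_nr = 0;
};

struct ToyVariable {
  std::shared_ptr<Node> grad_fn;           // set for interior variables
  std::shared_ptr<Node> grad_accumulator;  // used for leaves
  uint32_t output_nr = 0;

  // "Canonical" edge: grad_fn plus its input index for interior variables,
  // otherwise the accumulator with input_nr 0.
  ToyEdge gradient_edge() const {
    if (grad_fn) return ToyEdge{grad_fn, output_nr};
    return ToyEdge{grad_accumulator, 0};
  }

  // Always writes grad_fn/output_nr and never the accumulator, so calling it
  // on a leaf turns the toy variable into an interior one.
  void set_gradient_edge(ToyEdge edge) {
    grad_fn = std::move(edge.function);
    output_nr = edge.input_nr;
  }
};
```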

| uint32_t torch::autograd::Variable::output_nr | ( | ) | const |

inline, noexcept

Returns the input index of the gradient Function to which this Variable is connected.

Note: input indexes of the gradient Function correspond to output indexes of the corresponding forward Function.

Definition at line 687 of file variable.h.

| void torch::autograd::Variable::rebase_history | ( | Edge | gradient_edge | ) |

Updates the grad_fn of an existing Variable.

Called after in-place modifications.

For view Variables, it modifies the grad_fn of the base Variable.

Definition at line 236 of file variable.cpp.

| void torch::autograd::Variable::set_data | ( | const at::Tensor & new_data | ) |

inline

Sets the Tensor held by this Variable to the one supplied.

It is rarely necessary to call this; it's used, for example, when a non-sparse gradient gets added to a sparse gradient, requiring the type of the gradient Variable to become non-sparse.

Definition at line 678 of file variable.h.

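| void torch::autograd::Variable::set_grad_accumulator | ( | std::weak_ptr< Function > grad_accumulator | ) |

inline

Sets the gradient accumulator of the Variable.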

This is only applicable to leaf variables. Interior variables should call set_gradient_edge().

Definition at line 664 of file variable.h.

| void torch::autograd::Variable::set_gradient_edge | ( | Edge edge | ) |

inline, noexcept

Sets the gradient edge (i.e. grad_fn and input_nr) of the Variable.

NOTE: This always sets the grad_fn, even if this is a leaf variable, and never the grad_accumulator; for the latter, use set_grad_accumulator. This allows late construction of an interior Variable.

Definition at line 682 of file variable.h.

| void torch::autograd::Variable::set_requires_grad | ( | bool requires_grad | ) |

inline, override

Sets the requires_grad property of Variable.

This should be true for leaf variables that want to accumulate gradients, and false for all other variables.

Definition at line 361 of file variable.h.

| std::shared_ptr< Function > torch::autograd::Variable::try_get_grad_accumulator | ( | ) | const |

inline

Attempts to get a pointer to the gradient accumulator of the Variable, if it still exists.

If the gradient accumulator function has been destroyed, returns a nullptr.

Definition at line 669 of file variable.h.
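The Variable holds only a weak reference to its accumulator, so the accumulator can be destroyed while the Variable lives on. Here is a hypothetical sketch of the two accessors using `std::weak_ptr`. `ToyLeaf` and `ToyAccumulator` are made-up names. The mutex stands in for the `mutex_` data field, on the assumption that it guards the check-and-create step.

```cpp
#include <cassert>
#include <memory>
#include <mutex>

// Hypothetical stand-in for the accumulator node.
struct ToyAccumulator {
  int id;
};

class ToyLeaf {
 public:
  // Like try_get_grad_accumulator(): nullptr once nobody else holds the
  // accumulator, because the leaf itself keeps only a weak reference.
  std::shared_ptr<ToyAccumulator> try_get_grad_accumulator() const {
    return grad_accumulator_.lock();
  }

  // Like grad_accumulator(): reuse a live accumulator, or create one on the
  // fly and cache a weak reference to it.
  std::shared_ptr<ToyAccumulator> grad_accumulator() {
    std::lock_guard<std::mutex> guard(mutex_);
    if (auto existing = grad_accumulator_.lock()) return existing;
    auto created = std::make_shared<ToyAccumulator>(ToyAccumulator{next_id_++});
    grad_accumulator_ = created;
    return created;
  }

 private:
  std::weak_ptr<ToyAccumulator> grad_accumulator_;
  std::mutex mutex_;
  int next_id_ = 0;
};
```

In this sketch, once the last strong reference is dropped, try_get returns nullptr and the next grad_accumulator() call builds a fresh accumulator.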

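| Variable make_variable | ( | at::Tensor data, bool requires_grad, bool allow_tensor_metadata_change | ) |

friend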

Creates a Variable from the given Tensor, copying its underlying TensorImpl.

requires_grad should be set only for leaves, and determines whether the Variable will accumulate gradients. NOTE: data must not be a Variable already. Its dynamic type must be Tensor.

Definition at line 577 of file variable.h.

| Variable make_variable | ( | at::Tensor data, Edge gradient_edge, bool allow_tensor_metadata_change | ) |

friend

Creates a Variable from the given Tensor and specifies a gradient_edge, i.e. a (function, input_nr) pair identifying the function in the autograd graph and the particular input of that function to which this Variable is connected.

Definition at line 610 of file variable.h.

| Variable make_variable_consuming | ( | at::Tensor data, bool requires_grad, bool allow_tensor_metadata_change | ) |

friend

Creates a Variable from the given Tensor, consuming its underlying TensorImpl.

This is intended for functions that create a Tensor, immediately convert it to a Variable, and then free it. In some situations, this has been found to reduce the overhead of those operations. The comments about requires_grad and data on the copying make_variable overload also apply to this one.

Definition at line 594 of file variable.h.

| Variable make_variable_view | ( | Variable base, at::Tensor data, bool is_differentiable, bool allow_tensor_metadata_change, Edge gradient_edge | ) |

friend

Creates a Variable that is a view of another (base) variable.

The gradient_edge is an optional (gradient_function, input_number) pair. is_differentiable is a bool that specifies whether this view is differentiable, i.e., whether the relation should be tracked by autograd. See NOTE [ Autograd View Variables ] for details.

Setting allow_tensor_metadata_change_ to false by default would unnecessarily prevent metadata changes (e.g. to sizes or storage) on the new Variable, which is undesirable.

For a differentiable view, history is tracked with DifferentiableViewImpl. For a non-differentiable view, the new Variable just shares the base's version counter.

Definition at line 547 of file variable.h.

| uint32_t torch::autograd::Variable::attr_version |

The value of the version_counter at the time grad_fn was created.

The grad_fn field is stale if attr_version != version_counter.current_version().

Definition at line 393 of file variable.h.
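The staleness rule above, combined with the lazy re-creation described under grad_fn(), can be sketched as follows. `ToyView` and `ToyGradFn` are hypothetical. The counter is a plain `shared_ptr<uint32_t>` shared with the base, and "re-creating" the grad_fn just allocates a new object stamped with the current version.

```cpp
#include <cassert>
#include <cstdint>
#include <memory>
#include <utility>

// Hypothetical toy grad_fn that remembers the version it was built at.
struct ToyGradFn {
  uint32_t built_at_version;
};

class ToyView {
 public:
  explicit ToyView(std::shared_ptr<uint32_t> version_counter)
      : version_counter_(std::move(version_counter)) {}

  // Rebuild the cached grad_fn only when attr_version_ no longer matches
  // the shared counter (or nothing has been built yet).
  const std::shared_ptr<ToyGradFn>& grad_fn() {
    uint32_t current = *version_counter_;
    if (!grad_fn_ || attr_version_ != current) {
      grad_fn_ = std::make_shared<ToyGradFn>(ToyGradFn{current});
      attr_version_ = current;
      ++rebuilds_;
    }
    return grad_fn_;
  }

  int rebuilds() const { return rebuilds_; }

 private:
  std::shared_ptr<uint32_t> version_counter_;  // shared with the base
  std::shared_ptr<ToyGradFn> grad_fn_;
  uint32_t attr_version_ = 0;
  int rebuilds_ = 0;
};
```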

| at::Tensor torch::autograd::Variable::data_ |

The underlying data tensor for this Variable.

This field will be removed once VariableImpl and TensorImpl are merged.

Definition at line 442 of file variable.h.

1.8.11
