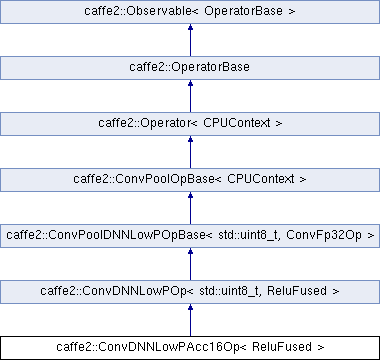

Quantized Conv operator with 16-bit accumulation. More...

#include <conv_dnnlowp_acc16_op.h>

Public Types | |

| using | BaseType = ConvDNNLowPOp< std::uint8_t, ReluFused > |

Public Types inherited from caffe2::Observable< OperatorBase > Public Types inherited from caffe2::Observable< OperatorBase > | |

| using | Observer = ObserverBase< OperatorBase > |

Public Member Functions | |

| USE_CONV_POOL_BASE_FUNCTIONS (CPUContext) | |

| ConvDNNLowPAcc16Op (const OperatorDef &operator_def, Workspace *ws) | |

| template<fbgemm::QuantizationGranularity Q_GRAN> | |

| void | DispatchFBGEMM_ (fbgemm::PackAWithRowOffset< uint8_t, int16_t > &packA, const uint8_t *col_buffer_data, vector< int32_t > *Y_int32, uint8_t *Y_uint8_data) |

Public Member Functions inherited from caffe2::ConvDNNLowPOp< std::uint8_t, ReluFused > Public Member Functions inherited from caffe2::ConvDNNLowPOp< std::uint8_t, ReluFused > | |

| USE_CONV_POOL_BASE_FUNCTIONS (CPUContext) | |

| USE_CONV_POOL_DNNLOWP_OPERATOR_BASE_FUNCTIONS (std::uint8_t, ConvFp32Op) | |

| ConvDNNLowPOp (const OperatorDef &operator_def, Workspace *ws) | |

Public Member Functions inherited from caffe2::ConvPoolDNNLowPOpBase< std::uint8_t, ConvFp32Op > Public Member Functions inherited from caffe2::ConvPoolDNNLowPOpBase< std::uint8_t, ConvFp32Op > | |

| USE_CONV_POOL_BASE_FUNCTIONS (CPUContext) | |

| ConvPoolDNNLowPOpBase (const OperatorDef &operator_def, Workspace *ws) | |

Public Member Functions inherited from caffe2::ConvPoolOpBase< CPUContext > Public Member Functions inherited from caffe2::ConvPoolOpBase< CPUContext > | |

| ConvPoolOpBase (const OperatorDef &operator_def, Workspace *ws) | |

| vector< int > | GetDims (const Tensor &input) |

| int | GetDimsSize (const Tensor &input) |

| std::vector< int64_t > | GetOutputSize (const Tensor &input, int output_channel) |

| void | SetOutputSize (const Tensor &input, Tensor *output, int output_channel) |

| void | ComputePads (const vector< int > &dims) |

| bool | HasPad () const |

| bool | HasStride () const |

| void | SetDeviceTensor (const std::vector< int > &data, Tensor *tensor) |

| void | SetBiasMultiplier (const int size, Tensor *bias_multiplier_) |

| bool | RunOnDevice () override |

Public Member Functions inherited from caffe2::Operator< CPUContext > Public Member Functions inherited from caffe2::Operator< CPUContext > | |

| Operator (const OperatorDef &operator_def, Workspace *ws) | |

| Operator (const c10::FunctionSchema &fn_schema, std::vector< c10::IValue > inputs, std::vector< at::Tensor > outputs) | |

| const Tensor & | Input (int idx, DeviceType type=CPUContext::GetDeviceType()) |

| Retrieve a non-owning reference to the input at position 'idx' for this operator. More... | |

| Tensor | XOutput (int idx, at::IntArrayRef dims, at::TensorOptions options) |

| XOutput is a modernized version of Output which returns a Tensor rather than a Tensor* (the raw pointer in the latter case is useless, as Tensor is a pointer type.) | |

Public Member Functions inherited from caffe2::OperatorBase Public Member Functions inherited from caffe2::OperatorBase | |

| OperatorBase (const OperatorDef &operator_def, Workspace *ws) | |

| OperatorBase (const c10::FunctionSchema &schema, std::vector< c10::IValue > inputs, std::vector< at::Tensor > outputs) | |

| bool | isLegacyOperator () const |

| Return true if the operator was instantiated with OperatorDef New operators should be instantiated with FunctionSchema. | |

| const c10::FunctionSchema & | getFunctionSchema () const |

| bool | HasArgument (const string &name) const |

| Checks if the operator has an argument of the given name. | |

| template<typename T > | |

| T | GetSingleArgument (const string &name, const T &default_value) const |

| template<typename T > | |

| bool | HasSingleArgumentOfType (const string &name) const |

| template<typename T > | |

| vector< T > | GetVectorFromIValueList (const c10::IValue &value) const |

| template<typename T > | |

| vector< T > | GetRepeatedArgument (const string &name, const vector< T > &default_value={}) const |

| template<typename T > | |

| const T & | Input (int idx) |

| template<typename T > | |

| const T & | Input (int idx, DeviceType type) |

| template<typename T > | |

| T * | Output (int idx) |

| template<typename T > | |

| T * | Output (int idx, DeviceType type) |

| Tensor | XOutputTensor (int idx, at::IntArrayRef dims, at::TensorOptions options) |

| void | SetOutputTensor (int idx, Tensor tensor) |

| Tensor | OutputTensorOrUndefined (int idx) |

| Tensor * | OutputTensor (int idx, at::IntArrayRef dims, at::TensorOptions options) |

| Tensor * | OutputTensorCopyFrom (int idx, at::TensorOptions options, const Tensor &src, bool async=false) |

| Tensor * | OutputTensorAlias (int idx, const Tensor &src) |

| template<typename T > | |

| T * | Output (int idx, T *allocated) |

| const Blob & | InputBlob (int idx) |

| Blob * | OutputBlob (int idx) |

| bool | IsInputOutputAlias (int i, int j) |

| template<typename T > | |

| bool | InputIsType (int idx) |

| bool | InputIsTensorType (int idx, DeviceType device_type) |

| template<typename T > | |

| bool | OutputIsType (int idx) |

| bool | OutputIsTensorType (int idx, DeviceType type) |

| int | InputSize () const |

| int | OutputSize () const |

| const vector< const Blob * > & | Inputs () const |

| const vector< Blob * > & | Outputs () |

| vector< TensorShape > | InputTensorShapes () const |

| virtual void | WaitEvent (const Event &ev, int=-1) |

| void | Wait (const OperatorBase &other, int stream_id=-1) |

| virtual void | WaitEvents (const std::vector< const Event * > &events, int=-1) |

| virtual void | Finish () |

| virtual bool | Run (int=0) |

| virtual bool | HasAsyncPart () const |

| virtual bool | SupportsAsyncScheduling () const |

| virtual bool | RunAsync (int stream_id=0) |

| virtual void | AddRelatedBlobInfo (EnforceNotMet *err) |

| const OperatorDef & | debug_def () const |

| void | set_debug_def (const std::shared_ptr< const OperatorDef > &operator_def) |

| bool | has_debug_def () const |

| void | RecordLastFailedOpNetPosition () |

| int | net_position () const |

| void | set_net_position (int idx) |

| const DeviceOption & | device_option () const |

| const Event & | event () const |

| Event & | event () |

| void | ResetEvent () |

| void | DisableEvent () |

| bool | IsEventDisabled () const |

| virtual void | SyncDeviceBarrierForObservers () |

| virtual bool | IsStreamFree (int) const |

| const std::string & | type () const |

| void | annotate_engine (const std::string &engine) |

| const std::string & | engine () const |

| void | SetExecutorHelper (ExecutorHelper *helper) |

| ExecutorHelper * | GetExecutorHelper () const |

| std::vector< at::Tensor > | move_newstyle_outputs ()&& |

| template<> | |

| NetDef | GetSingleArgument (const std::string &name, const NetDef &default_value) const |

| template<> | |

| vector< int > | GetVectorFromIValueList (const c10::IValue &value) const |

| template<> | |

| vector< float > | GetVectorFromIValueList (const c10::IValue &value) const |

| template<> | |

| vector< string > | GetVectorFromIValueList (const c10::IValue &value) const |

Public Member Functions inherited from caffe2::Observable< OperatorBase > Public Member Functions inherited from caffe2::Observable< OperatorBase > | |

| Observable (Observable &&)=default | |

| Observable & | operator= (Observable &&)=default |

| C10_DISABLE_COPY_AND_ASSIGN (Observable) | |

| const Observer * | AttachObserver (std::unique_ptr< Observer > observer) |

| std::unique_ptr< Observer > | DetachObserver (const Observer *observer_ptr) |

| Returns a unique_ptr to the removed observer. More... | |

| virtual size_t | NumObservers () |

| void | StartAllObservers () |

| void | StopAllObservers () |

Additional Inherited Members | |

Static Public Member Functions inherited from caffe2::ConvPoolOpBase< CPUContext > Static Public Member Functions inherited from caffe2::ConvPoolOpBase< CPUContext > | |

| static void | InferOutputSize (const at::IntArrayRef &input_dims, const int output_channel, const StorageOrder order, const bool global_pooling, const LegacyPadding legacy_pad, const std::vector< int > &dilation, const std::vector< int > &stride, std::vector< int > *kernel, std::vector< int > *pads, std::vector< int > *output_dims) |

| static void | InferOutputSize64 (const at::IntList &input_dims, const int output_channel, const StorageOrder order, const bool global_pooling, const LegacyPadding legacy_pad, const std::vector< int > &dilation, const std::vector< int > &stride, std::vector< int > *kernel, std::vector< int > *pads, std::vector< int64_t > *output_dims) |

| static struct OpSchema::Cost | CostInferenceForConv (const OperatorDef &def, const vector< TensorShape > &inputs) |

| static vector< TensorShape > | TensorInferenceForSchema (const OperatorDef &def, const vector< TensorShape > &in, int output_channel) |

| static std::vector< TensorShape > | TensorInferenceForConv (const OperatorDef &def, const std::vector< TensorShape > &in) |

| static std::vector< TensorShape > | TensorInferenceForPool (const OperatorDef &def, const std::vector< TensorShape > &in) |

| static std::vector< TensorShape > | TensorInferenceForLC (const OperatorDef &def, const std::vector< TensorShape > &in) |

Data Fields inherited from caffe2::ConvPoolOpBase< CPUContext > Data Fields inherited from caffe2::ConvPoolOpBase< CPUContext > | |

| USE_OPERATOR_CONTEXT_FUNCTIONS | |

Static Public Attributes inherited from caffe2::OperatorBase Static Public Attributes inherited from caffe2::OperatorBase | |

| static const int | kNoNetPositionSet = -1 |

Protected Types inherited from caffe2::ConvDNNLowPOp< std::uint8_t, ReluFused > Protected Types inherited from caffe2::ConvDNNLowPOp< std::uint8_t, ReluFused > | |

| using | T_signed = typename std::make_signed< std::uint8_t >::type |

Protected Member Functions inherited from caffe2::ConvDNNLowPOp< std::uint8_t, ReluFused > Protected Member Functions inherited from caffe2::ConvDNNLowPOp< std::uint8_t, ReluFused > | |

| bool | RunOnDeviceWithOrderNCHW () override |

| bool | RunOnDeviceWithOrderNHWC () override |

| bool | GetQuantizationParameters_ () |

| bool | IsConvGEMM_ () const |

| bool | NoIm2ColNHWC_ () |

| int | KernelDim_ () |

| const std::uint8_t * | Im2ColNHWC_ (Tensor *col_buffer) |

| dnnlowp::TensorQuantizationParams & | FilterQuantizationParams (int group_id) |

| dnnlowp::RequantizationParams & | RequantizationParams (int group_id) |

| INPUT_TAGS (INPUT, FILTER, BIAS) | |

| void | RunOnDeviceEpilogueNCHW_ (const std::uint8_t *col_buffer_data, std::int32_t *Y_int32, std::uint8_t *Y_data, std::size_t i_offset, int group_id) |

| void | RunOnDeviceEpilogueNHWC_ (const std::uint8_t *col_buffer_data, std::int32_t *Y_int32) |

Protected Member Functions inherited from caffe2::ConvPoolDNNLowPOpBase< std::uint8_t, ConvFp32Op > Protected Member Functions inherited from caffe2::ConvPoolDNNLowPOpBase< std::uint8_t, ConvFp32Op > | |

| const TensorCPU & | InputTensorCPU_ (int idx) |

| TensorCPU * | OutputTensorCPU_ (int idx) |

| Tensor * | OutputTensorCPU_ (int idx, at::IntList dims, at::TensorOptions options) |

| std::uint8_t * | GetQuantizedOutputData_ () |

| void | MeasureQuantizationError_ () |

| void | RunOnDeviceEpilogue_ () |

| void | ParseDNNLowPOperatorArguments_ () |

| void | GetOutputQuantizationParams_ () |

| OpWrapper< ConvFp32Op, std::uint8_t > * | Fp32Op_ () |

| void | CreateSharedInt32Buffer_ () |

| void | RunWithSharedBuffer_ (Tensor *col_buffer, vector< int32_t > *Y_int32, std::function< void(Tensor *col_buffer_shared, vector< int32_t > *Y_int32_shared)> f) |

Protected Member Functions inherited from caffe2::ConvPoolOpBase< CPUContext > Protected Member Functions inherited from caffe2::ConvPoolOpBase< CPUContext > | |

| int | pad_t () const |

| int | pad_l () const |

| int | pad_b () const |

| int | pad_r () const |

| int | kernel_h () const |

| int | kernel_w () const |

| int | stride_h () const |

| int | stride_w () const |

| int | dilation_h () const |

| int | dilation_w () const |

Protected Member Functions inherited from caffe2::OperatorBase Protected Member Functions inherited from caffe2::OperatorBase | |

| virtual void | RecordEvent (const char *=nullptr) |

| void | SetEventFinished (const char *err_msg=nullptr) |

| void | SetEventFinishedWithException (const char *err_msg=nullptr) |

| std::string | getErrorMsg () |

| C10_DISABLE_COPY_AND_ASSIGN (OperatorBase) | |

Static Protected Member Functions inherited from caffe2::ConvDNNLowPOp< std::uint8_t, ReluFused > Static Protected Member Functions inherited from caffe2::ConvDNNLowPOp< std::uint8_t, ReluFused > | |

| static void | PartitionGroupedNHWCConv_ (int *group_begin, int *group_end, int *i_begin, int *i_end, int num_groups, int m, int nthreads, int thread_id) |

Static Protected Member Functions inherited from caffe2::ConvPoolOpBase< CPUContext > Static Protected Member Functions inherited from caffe2::ConvPoolOpBase< CPUContext > | |

| static void | ComputeSizeAndPad (const int in_size, const int stride, const int kernel, const int dilation, LegacyPadding legacy_pad, int *pad_head, int *pad_tail, int *out_size) |

| static void | ComputeSizeAndPad64 (const int in_size, const int stride, const int kernel, const int dilation, LegacyPadding legacy_pad, int *pad_head, int *pad_tail, int64_t *out_size) |

Protected Attributes inherited from caffe2::ConvDNNLowPOp< std::uint8_t, ReluFused > Protected Attributes inherited from caffe2::ConvDNNLowPOp< std::uint8_t, ReluFused > | |

| Tensor | col_buffer_ |

| Tensor | img_shape_device_ |

| Tensor | col_buffer_shape_device_ |

| std::vector< T_signed > | W_quantized_ |

| std::shared_ptr< std::vector< std::int32_t > > | column_offsets_ |

| std::vector< std::int32_t > | row_offsets_ |

| const std::int32_t * | b_quantized_data_ |

| std::vector< std::uint8_t > | X_pack_buf_ |

| std::vector< std::int32_t > | Y_int32_ |

| std::vector< dnnlowp::TensorQuantizationParams > | filter_qparams_ |

| std::vector< std::int32_t > | filter_zero_points_ |

| std::vector< float > | requantization_multipliers_ |

| bool | quantize_groupwise_ |

Protected Attributes inherited from caffe2::ConvPoolDNNLowPOpBase< std::uint8_t, ConvFp32Op > Protected Attributes inherited from caffe2::ConvPoolDNNLowPOpBase< std::uint8_t, ConvFp32Op > | |

| bool | measure_quantization_error_ |

| std::string | followed_by_ |

| std::vector< dnnlowp::TensorQuantizationParams > | in_qparams_ |

| dnnlowp::TensorQuantizationParams | out_qparams_ |

| std::unique_ptr< OpWrapper< ConvFp32Op, std::uint8_t > > | fp32_op_ |

| std::unique_ptr< dnnlowp::QuantizationFactory > | qfactory_ |

| std::vector< std::uint8_t > | out_temp_ |

| dnnlowp::QuantizationErrorStats | quantization_error_stats_ |

| bool | arguments_parsed_ |

Protected Attributes inherited from caffe2::ConvPoolOpBase< CPUContext > Protected Attributes inherited from caffe2::ConvPoolOpBase< CPUContext > | |

| LegacyPadding | legacy_pad_ |

| bool | global_pooling_ |

| vector< int > | kernel_ |

| vector< int > | dilation_ |

| vector< int > | stride_ |

| vector< int > | pads_ |

| bool | float16_compute_ |

| int | group_ |

| StorageOrder | order_ |

| bool | shared_buffer_ |

| Workspace * | ws_ |

Protected Attributes inherited from caffe2::OperatorBase Protected Attributes inherited from caffe2::OperatorBase | |

| std::unique_ptr< Event > | event_ |

Protected Attributes inherited from caffe2::Observable< OperatorBase > Protected Attributes inherited from caffe2::Observable< OperatorBase > | |

| std::vector< std::unique_ptr< Observer > > | observers_list_ |

Quantized Conv operator with 16-bit accumulation.

We'll encounter saturation but this will be faster in Intel CPUs

Definition at line 13 of file conv_dnnlowp_acc16_op.h.

1.8.11

1.8.11